Echocardiography has revolutionized the diagnostic approach to patients with congenital heart disease. A comprehensive cardiovascular ultrasound imaging and hemodynamic evaluation is the initial diagnostic test used in the assessment of any congenital cardiac malformation. Since echocardiography became a part of clinical practice in the 1970s, the technology used for cardiac imaging has been in a nearly constant state of change. New techniques have been introduced at an increasingly rapid pace, especially since the 1990s. In this chapter, we will review the basic physical properties of ultrasound and the primary modalities used in clinical imaging. These discussions will provide an important foundation that will allow us to understand the more advanced methods of imaging and functional assessment, which will be covered in more detail later.

WHAT IS ULTRASOUND?

Diagnostic ultrasound generates images of internal organs by reflecting sound energy off the anatomic structures being studied. An ultrasound imaging system is designed to project sound waves into a patient, detect the reflected energy, and then convert that energy into an image on a video screen. The sound waves used are called “ultrasound” because their frequencies are greater than those that can be detected by the human ear. The average human ear can respond to frequencies between 20 and 20,000 Hz. Ultrasound waves, therefore, have frequencies greater than 20,000 Hz. In clinical practice, most imaging applications actually require frequencies in excess of 1 MHz. Current cardiac imaging systems can produce ultrasound beams with frequencies between 2 and 12 MHz. A typical diagnostic ultrasound system consists of a central processing unit (CPU), the video image display screen, a hard drive for storage of the digital images, and a selection of transducers. The transducers both transmit and receive ultrasound energy.

Some Definitions

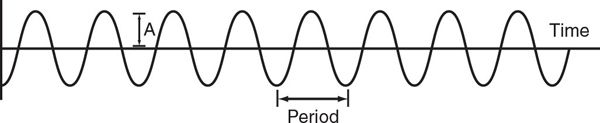

■Sound wave: Series of cyclical compressions and rarefactions of the medium through which the wave travels (Fig. 1.1).

■Cycle: One alternation from peak compression through rarefaction and back to peak compression in a sound wave.

■Wavelength (λ): Distance from one peak (or trough) of the wave to the next (one complete cycle of sound).

■Velocity (v): Speed with which sound travels through a medium. The ultrasound propagation velocity in human tissue is 1540 m/s.

■Period (p): Time duration required to complete one cycle of sound.

■Amplitude (A): Magnitude of the sound wave, representing the maximum change from baseline to the peak of a compression or a rarefaction in a cycle.

■Frequency (f): Number of sound cycles occurring in 1 second.

■Power: Rate at which energy is transferred from the sound wave to the medium. This is related to the square of the wave amplitude.

■Fundamental or carrier frequency (f0): Frequency of the transmitted sound wave.

■Harmonic frequency (fx): Sound waves that are exact multiples of the carrier frequency. The first harmonic frequency is twice the frequency of the carrier wave.

■Bandwidth: Range of frequencies that a piezoelectric crystal can produce and/or respond to.
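The definitions above are tied together by two standard relationships: the period is the reciprocal of the frequency (p = 1/f), and the wavelength is the propagation velocity divided by the frequency (λ = v/f). The following is a minimal Python sketch of those relationships, using the tissue velocity of 1540 m/s given above. The function and constant names are illustrative only and do not come from the chapter.

```python
# Propagation velocity of ultrasound in human tissue, as given in the definitions.
TISSUE_VELOCITY_M_PER_S = 1540.0


def period_s(frequency_hz: float) -> float:
    """Period p: time needed to complete one cycle (p = 1 / f)."""
    return 1.0 / frequency_hz


def wavelength_m(frequency_hz: float,
                 velocity_m_per_s: float = TISSUE_VELOCITY_M_PER_S) -> float:
    """Wavelength: distance covered by one cycle (lambda = v / f)."""
    return velocity_m_per_s / frequency_hz


if __name__ == "__main__":
    # 2 and 12 MHz bracket the range quoted for cardiac imaging systems.
    for f_mhz in (2.0, 12.0):
        f_hz = f_mhz * 1e6
        print(f"{f_mhz:4.1f} MHz: period = {period_s(f_hz) * 1e6:.3f} us, "
              f"wavelength = {wavelength_m(f_hz) * 1e3:.3f} mm")
```

With these relationships, a 2-MHz beam has a wavelength of about 0.77 mm in tissue, and a 12-MHz beam has a wavelength of about 0.13 mm.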

Figure 1.1. Graphic depiction of a sound wave. The portions of the wave above the dashed baseline represent compression of the medium by the energy in the wave. Conversely, the portions of the wave shown below the baseline represent rarefaction. The portion of the wave that lies between one peak and the next, or one valley and the next, is one cycle, and the time required to complete it is referred to as the period. Wavelength is the distance covered by one cycle. Amplitude (A) refers to the maximum change from baseline caused by the wave (by either compression or rarefaction).

IMAGE GENERATION

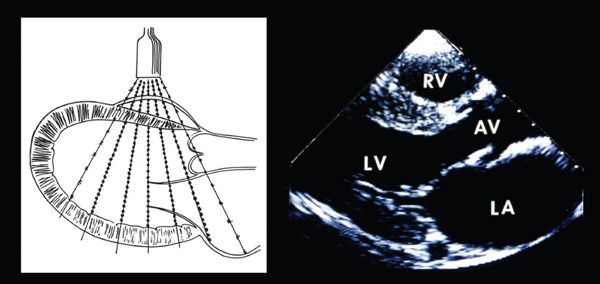

Diagnostic ultrasound imaging relies on the ability of high-frequency sound waves to propagate (travel) through the body and be partially reflected back toward the sound source by target tissues within the patient (Fig. 1.2). The imaging system generates the imaging beam by electrically exciting a number of piezoelectric crystals contained within a transducer. The imaging beam is then focused and projected into the patient. As the ultrasound beam travels through the patient, some of the energy will be scattered into the surrounding tissue (attenuation), and some will be reflected back toward the source by the structures in the beam’s path. These reflected waves will provide the information used to create images of the internal organs. This is the same imaging strategy used in sonar technology to detect objects below the water’s surface. The intensity (amplitude) of the reflected energy wave is proportional to the density of the reflecting tissue (see later). The reflected ultrasound energy induces vibrations in the transducer crystals and an electric current is created. This current is sensed by the CPU and converted into a video image.

Ultrasound image generation is based primarily on the amount of energy contained in the reflected wave and the time between transmission of the ultrasound pulse and detection of the reflected waves by the transducer crystals. The interval between transmission and detection of the reflected waves is referred to as “time of flight.” The depth at which the ultrasound image is displayed is determined by this time interval. Reflections from structures in the far field take longer to return to the transducer than do reflections from objects close to the sound source. This time interval is sensed by the CPU and directly converted into distance from the sound source based on the speed of ultrasound propagation within tissue. Because the interval covers the trip to the reflector and back, the reflector’s depth corresponds to half of the total distance traveled.
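The conversion from time of flight to display depth can be sketched in a few lines of Python. This is only an illustration of the arithmetic, assuming a uniform propagation velocity of 1540 m/s and a straight path to the reflector and back. The function names are hypothetical and do not describe how any particular ultrasound system is implemented.

```python
# Propagation velocity of ultrasound in human tissue (from the definitions).
TISSUE_VELOCITY_M_PER_S = 1540.0


def depth_cm(time_of_flight_s: float,
             velocity_m_per_s: float = TISSUE_VELOCITY_M_PER_S) -> float:
    """Depth of a reflector from the round-trip time of flight.

    The pulse travels to the reflector and back, so the one-way
    distance is half of velocity * time.
    """
    one_way_m = velocity_m_per_s * time_of_flight_s / 2.0
    return one_way_m * 100.0  # metres to centimetres


def time_of_flight_s(reflector_depth_cm: float,
                     velocity_m_per_s: float = TISSUE_VELOCITY_M_PER_S) -> float:
    """Round-trip time for an echo from a reflector at the given depth."""
    return 2.0 * (reflector_depth_cm / 100.0) / velocity_m_per_s


if __name__ == "__main__":
    for t_us in (50.0, 100.0, 200.0):
        print(f"echo after {t_us:5.1f} us -> depth {depth_cm(t_us * 1e-6):.2f} cm")
```

Under these assumptions, an echo detected 100 µs after transmission corresponds to a reflector about 7.7 cm from the transducer.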

The energy contained within the reflected waves is related to the amplitude of those waves. The amplitude of the reflected waves can be measured based on the amount of electric current produced by the receiving crystals. The brightness of the image created by the ultrasound system is determined by the amplitude of the reflected waves. Bodily fluids, such as blood, effusions, and ascites, will transmit nearly all of the energy contained within the imaging beam. Because there is little reflected energy, these areas are displayed as black (or nearly black) on the imaging screen. Air is not dense enough to allow transmission of sound frequencies in the ultrasound range. Therefore, all of the imaging energy present in the beam will be reflected at an air–tissue interface, such as at the edge of a pneumothorax or of the normal lung. This nearly 100% reflection is translated into a very bright (usually white) representation on the imaging screen. Other very dense tissues, like bone, will also reflect virtually all of the energy and be displayed as very bright echo returns. Structures beyond these very bright “echoes” cannot be displayed, because no ultrasound energy reaches them. These areas are often referred to as acoustic shadows. Fat, muscle, and other tissues will transmit some of the imaging beam and reflect a fraction of the sound wave. The amount reflected is related to the density of the tissue, and the amount of returning energy sensed by the crystals in the transducer will determine how brightly an image will be displayed on the video screen.

Figure 1.2. Ultrasound transducer transmitting a plane of ultrasound through a heart in a parasternal, sagittal, or long-axis plane (left). The myocardial and valvar structures reflect the ultrasound energy back to the transducer. The crystals within the transducer detect the returning energy, and the processors within the ultrasound system quantitate the intensity of the reflected waves and the time required for the ultrasound energy to travel from the transducer to the reflector and back. The intensity of the returning signal determines the brightness of the display (right), and the time defines the depth at which the signal is displayed. The central processing unit filters and then converts this information into a video display (right), which corresponds to the anatomy encountered by the plane of sound as it traversed the chest. AV, aortic valve; LA, left atrium; LV, left ventricle; RV, right ventricle.

During a clinical examination, echocardiographers are usually less conscious of amplitude than they are of the frequency of the transmitted sound beam. The frequency of the ultrasound waves has a tremendous impact on the ability to produce images of anatomic structures. The greater the frequency, the greater the resolution of the resulting image. However, high-frequency sound beams lose more of their energy to surrounding tissues (attenuation) and, therefore, do not penetrate human tissue as well as low-frequency beams. Thus, the echocardiographer must always balance penetration (lower frequencies) and resolution (higher frequencies). For example, the heart of an adult patient will have structures that are positioned farther from the transducer than they are in the heart of a child. Therefore, lower imaging frequencies are usually required to produce adequate images in older patients.
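The penetration side of this trade-off can be illustrated with a rough calculation. The sketch below assumes the commonly quoted rule of thumb that soft tissue attenuates ultrasound by roughly 0.5 dB per centimetre per megahertz. That coefficient is an assumption introduced here for illustration. It is not a value given in this chapter, and real attenuation varies with tissue type.

```python
# ASSUMPTION (not from the chapter): soft tissue attenuates roughly
# 0.5 dB per cm per MHz. Actual values differ between tissues.
ASSUMED_ATTENUATION_DB_PER_CM_MHZ = 0.5


def round_trip_loss_db(frequency_mhz: float,
                       depth_cm: float,
                       coeff: float = ASSUMED_ATTENUATION_DB_PER_CM_MHZ) -> float:
    """Approximate attenuation for a pulse travelling to a reflector and back.

    The path length is twice the depth, and the loss grows linearly
    with both frequency and path length under the assumed model.
    """
    return coeff * frequency_mhz * 2.0 * depth_cm


if __name__ == "__main__":
    # Same reflector depth, low versus high transmit frequency.
    for f_mhz in (3.0, 8.0):
        loss = round_trip_loss_db(f_mhz, depth_cm=10.0)
        print(f"{f_mhz:.0f} MHz, reflector at 10 cm: about {loss:.0f} dB round-trip loss")
```

Under this assumed model, the higher-frequency beam loses far more energy over the same path, which is consistent with the preference for lower frequencies when the structures of interest lie deeper.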

The advent of harmonic imaging has significantly enhanced the ability to examine these older patients with surface echocardiography. Human tissue is not homogeneous in character. As a result, when an imaging beam is reflected by the target, the reflected sound energy exists not only in an unaltered state but also at multiples of the carrier frequency (harmonic waves). Modern ultrasound transducers now have sufficiently broad bandwidths to vibrate not only at the carrier or fundamental frequency but also at the first harmonic frequency of the transmitted wave. The first harmonic frequency has twice the number of alternating cycles of compression/rarefaction relative to the transmitted frequency. For example, the first harmonic frequency of a 4-MHz ultrasound beam will be 8 MHz. This allows the ultrasound system to transmit at a relatively low frequency but to detect (to image with) reflected waves of a much higher frequency than the original wave. Thus, harmonic imaging combines the advantages of low-frequency transducers (penetration) with the improved resolution associated with higher-frequency imaging.
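As a small worked illustration, the sketch below computes the first harmonic using this chapter's convention (twice the carrier frequency) and checks whether a transducer bandwidth covers both the carrier and the harmonic. The bandwidth limits in the example are hypothetical values chosen for illustration. They do not describe any specific transducer.

```python
def first_harmonic_mhz(carrier_mhz: float) -> float:
    """First harmonic as defined in this chapter: twice the carrier frequency."""
    return 2.0 * carrier_mhz


def supports_harmonic_imaging(carrier_mhz: float,
                              band_low_mhz: float,
                              band_high_mhz: float) -> bool:
    """True if the bandwidth spans both the carrier and its first harmonic.

    The transducer must transmit at the carrier frequency and respond
    to the returning harmonic, so both must lie inside the band.
    """
    harmonic = first_harmonic_mhz(carrier_mhz)
    carrier_ok = band_low_mhz <= carrier_mhz <= band_high_mhz
    harmonic_ok = band_low_mhz <= harmonic <= band_high_mhz
    return carrier_ok and harmonic_ok


if __name__ == "__main__":
    carrier = 4.0  # MHz, the example used in the text
    print(f"carrier {carrier} MHz -> first harmonic {first_harmonic_mhz(carrier)} MHz")
    # Hypothetical bandwidths: a broad one and a narrow one.
    print(supports_harmonic_imaging(carrier, 2.0, 9.0))
    print(supports_harmonic_imaging(carrier, 2.0, 6.0))
```

The second check fails because the hypothetical 2 to 6 MHz band cannot respond to the 8-MHz harmonic, which is why a broad bandwidth is a prerequisite for harmonic imaging.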

COMMON IMAGING FORMATS AND IMAGING ARTIFACTS

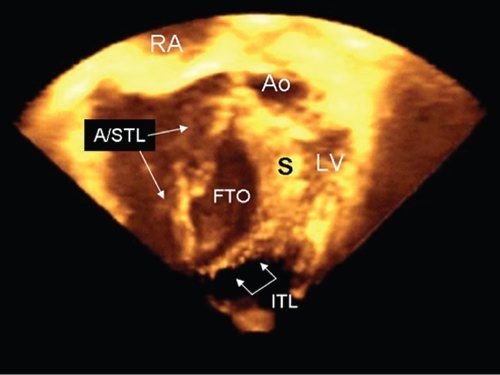

The earliest echocardiograms displayed either the amplitude or the brightness of the reflected waves on an oscilloscope. These were referred to as either A-mode (amplitude) or B-mode (brightness) echocardiograms. When video screens were linked to echocardiographic systems, it became possible to display the information in “real-time.” These “echocardiograms in motion” were referred to as motion mode studies, or M-mode. M-mode scans display the brightness of the ultrasound reflections, as well as the distance from the transducer and the time at which the reflection occurs. This allows the examiner to visualize cardiac activity as it occurs. M-mode scanning interrogates targets along a single line within the patient. Advances in transducer construction, image processing, and video display allowed multiple M-lines to be fused into a scanning sector, usually spanning 80 to 90 degrees of arc. Two-dimensional sector scanning produced a “flat” tomographic display of the areas being interrogated. Two-dimensional imaging remains the primary imaging modality in modern anatomic echocardiography (see Fig. 1.2). However, additional advances in transducer and image processing capabilities now allow real-time volumetric three-dimensional interrogation of the cardiovascular system (Fig. 1.3). As this technology continues to progress, it is likely to, once again, revolutionize the way in which echocardiographic data are acquired.

Figure 1.3. Improved transducer and processing capabilities allow ultrasound systems to scan in “volumes” of interest rather than just in planes. This image was obtained during real-time three-dimensional imaging. The patient had significant Ebstein malformation. The transducer was positioned at the right ventricular apex, and the resulting volume of sound was cropped to display the ventricular cavity at the level of the functional tricuspid orifice (FTO). The abnormalities of the tricuspid valve leaflets are clearly seen. The large anterior leaflet and remnant of the septal leaflets (A/STL) are highlighted (long arrows), and the smaller inferior tricuspid leaflet (ITL) can be seen parallel to the diaphragm (short connected arrows). Ao, aorta; LV, left ventricle; RA, right atrium; S, ventricular septum.

Ultrasound, like any imaging technique, will occasionally produce an erroneous image. These are referred to as artifacts. The echocardiographer must be aware of these imaging anomalies to avoid misinterpretation of the images. Image formation depends on the reflection of ultrasound energy. As a result, the most common artifact encountered is due to the fact that structures that lie parallel to the beam of sound produce no return. The structures do not appear on the video image. This is referred to as parallel dropout. Imaging areas from multiple angles of interrogation is a way to avoid this problem. Very dense, and therefore bright, structures produce echocardiographic shadows that lie beyond the intense return and parallel to the plane of sound. This shadowing reduces or eliminates the information obtainable in these areas. Imaging from multiple windows and angles of interrogation is the most effective strategy when faced with such shadows. In extreme cases, such as shadowing caused by a prosthetic valve, the transducer may need to be placed posterior to the heart to avoid the shadow. These are situations in which transesophageal echocardiography can be extremely useful. Echo-dense structures can also distort the image lateral to the bright reflector. This occurs due to scattering of the ultrasound energy in nonparallel directions to the original beam. This distortion has been referred to as side-lobing. This effect can artificially broaden the appearance of a bright structure, such as a calcified valve or thickened pericardium. Enhanced focusing and filtering capabilities have significantly reduced this issue in modern equipment. Other unusual echo returns can be encountered. These returns often have an arc-like appearance on the video screen. Alterations in frequency, depth of image, or video frame rate will frequently eliminate these returns from the image, confirming their artifactual nature.

THE DOPPLER EFFECT AND CARDIOVASCULAR HEMODYNAMICS

Structures of interest to the echocardiographer are generally not stationary. It is well known that objects in motion reflect sound energy differently than do objects at rest. When an energy wave reflects off or is produced by a moving target, the frequency of the resulting wave is altered based on the direction and speed of the target. This phenomenon was first described by Austrian scientist Christian Doppler in 1843 while he was studying distant stars. Doppler found that the change (or shift) in the frequency of the wave produced or reflected by an object in motion is directly proportional to both its speed and the direction of the motion relative to the observer. This shift has become known as the Doppler effect in honor of its discoverer. A classic example of the Doppler effect is the change in the perceived pitch of a train horn as it approaches and then passes by a stationary observer. The train’s horn produces a sound of a single, constant pitch, defined by the frequency of the sound waves. However, when the train is in motion, the frequency/pitch that will be “heard” by the observer will be greater or less than the transmitted frequency, depending on the direction of motion. If the train is traveling toward the observer, the perceived frequency is greater than the transmitted frequency (more cycles per second). Conversely, the pitch is lower than the transmitted frequency if the train is moving away from the observer.
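The train example can be made concrete with the classical formula for a moving sound source and a stationary observer, f_observed = f_source × c / (c − v), where v is the source speed toward the observer (negative when moving away) and c is the speed of sound in the medium. The sketch below is illustrative only. The speed of sound in air (about 343 m/s), the 440-Hz horn, and the train speed are assumptions chosen for the example, not values from this chapter.

```python
# ASSUMPTION: speed of sound in air of about 343 m/s (dry air near 20 C).
SPEED_OF_SOUND_AIR_M_PER_S = 343.0


def perceived_frequency_hz(source_hz: float,
                           speed_toward_observer_m_per_s: float,
                           c: float = SPEED_OF_SOUND_AIR_M_PER_S) -> float:
    """Frequency heard by a stationary observer from a moving source.

    Positive speed means the source approaches the observer,
    negative speed means it moves away.
    """
    if abs(speed_toward_observer_m_per_s) >= c:
        raise ValueError("source speed must be below the speed of sound")
    return source_hz * c / (c - speed_toward_observer_m_per_s)


if __name__ == "__main__":
    horn_hz = 440.0        # hypothetical horn pitch
    train_speed = 30.0     # hypothetical train speed in m/s
    print(f"approaching: {perceived_frequency_hz(horn_hz, train_speed):.1f} Hz")
    print(f"at rest:     {perceived_frequency_hz(horn_hz, 0.0):.1f} Hz")
    print(f"receding:    {perceived_frequency_hz(horn_hz, -train_speed):.1f} Hz")
```

The approaching train is heard at a higher pitch than the horn actually produces, and the receding train at a lower pitch. For speeds that are small compared with the speed of sound, the size of the shift is close to proportional to the speed of the source.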

These shifts in frequency occur because targets in motion toward an observer will physically encounter and reflect the wave more often than will a stationary target. This increase in “encounter rate” compresses the reflected wave and thereby increases the number of cycles per second in the “reflection,” increasing the frequency (Fig. 1.4). If the target is moving away from the observer, the energy wave will encounter the target, and be reflected, less often (Fig. 1.5
