The ability to integrate echocardiographic screening for rheumatic heart disease (RHD) into RHD prevention programs is limited by a lack of financial and expert human resources in endemic areas. Task shifting to nonexperts is promising, but investigations into workforce composition and training schemes are needed. The objective of this study was to test nonexperts’ ability to interpret RHD screening echocardiograms after a brief, standardized, computer-based training course. Six nonexperts completed a 3-week curriculum on image interpretation. Participant performance was tested in a school-screening environment in comparison to the reference approach (cardiologists, standard portable echocardiography machines, and 2012 World Heart Federation criteria). All participants successfully completed the curriculum, and feedback was universally positive. Screening was performed in 1,381 children (5 to 18 years, 60% female), with 397 (47 borderline RHD, 6 definite RHD, 336 normal, and 8 other) referred for handheld echocardiography. Overall sensitivity of the simplified approach was 83% (95% CI 76% to 89%), with an overall specificity of 85% (95% CI 82% to 87%). The most common reasons for false-negative screens (n = 16) were missed mitral regurgitation (MR; 44%) and MR ≤1.5 cm (29%). The most common reasons for false-positive screens (n = 179) included identification of erroneous color jets (25%), incorrect MR measurement (24%), and appropriate application of simplified guidelines (39.4%). In conclusion, a short, independent computer-based curriculum can be successfully used to train a heterogeneous group of nonexperts to interpret RHD screening echocardiograms. This approach helps address prohibitive financial and workforce barriers to widespread RHD screening.

Echocardiography has emerged as the most sensitive tool to find children living with latent rheumatic heart disease (RHD), yet integration of echocardiographic screening into public health programming has not occurred. The severe shortage of specialized health care providers in RHD-endemic areas remains one of the major barriers to implementation. Task shifting of echocardiographic screening to nonexperts represents a promising solution. Early data show that nonexperts can achieve reasonable sensitivity and specificity given focused training and simplified echocardiographic screening protocols with both standard and handheld echocardiography machines. However, to scale up the use of nonexperts for RHD screening, standardized training and competency testing are urgently needed. The objective of this study was to test the ability of nonexperts with a variety of echocardiography experience to interpret RHD screening echocardiograms using handheld echocardiography after a brief, asynchronous, standardized, computer-based training course on image interpretation.

Methods

This study was conducted in Belo Horizonte, Brazil, in the context of an existing school-based RHD screening program (Programa de RastreamentO da VAlvopatia Reumática [PROVAR]). Ethical approval was obtained from Comitê de Ética em Pesquisa, Universidade Federal de Minas Gerais, and Children’s National Health System. Informed consent was obtained from each participant, and the study protocol conformed to the ethical guidelines of the Declaration of Helsinki.

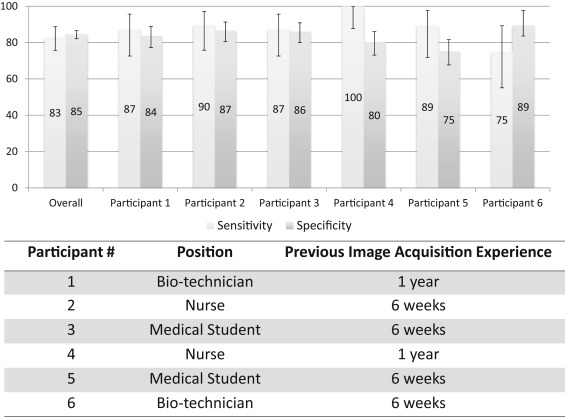

Six nonexperts, already selected for participation in the PROVAR program, with a variety of health care backgrounds (2 nurses, 2 biotechnicians, and 2 medical students) and practical experience obtaining echocardiographic images (4 with 6 weeks and 2 with 1 year) participated. The 2 participants with 1 year of experience had acquired images using standard portable echocardiography equipment during the previous year (each with 300 to 500 examinations) but had not interpreted the studies. The 4 participants with 6 weeks of image acquisition experience had recently joined PROVAR and had just completed observational and hands-on training in acquiring echocardiographic images, totaling approximately 60 hours over 6 weeks. This training did not include image interpretation or field experience.

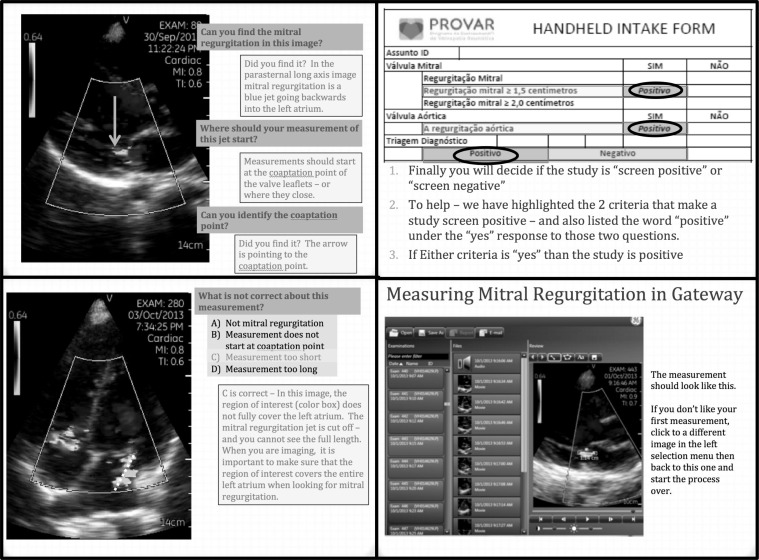

A previously published simplified RHD screening protocol was taught, and participants performed limited echocardiograms and interpreted them as screen positive or negative. Briefly, mitral regurgitation (MR) ≥1.5 cm and/or the presence of any aortic regurgitation (AR) was considered screen positive. A 3-week self-directed educational period was followed by field-testing of school-based echocardiographic screening using handheld echocardiography (GE Vscan, Milwaukee, Wisconsin).
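The screen-positive rule described above can be written as a small decision function. This is only an illustrative sketch: the function name, argument names, and the use of `None` for "no MR jet seen" are assumptions made for the example and are not part of the published protocol.

```python
# Illustrative sketch of the simplified screening rule described in the text:
# screen positive if the MR jet is >= 1.5 cm and/or any AR is present.
# Names and the None convention are hypothetical, chosen for this example.

MR_SCREEN_CUTOFF_CM = 1.5  # MR jet length cutoff stated in the text


def is_screen_positive(mr_jet_cm, any_ar, mr_cutoff_cm=MR_SCREEN_CUTOFF_CM):
    """Return True when the study meets the simplified screen-positive rule.

    mr_jet_cm: measured MR jet length in cm, or None if no MR was seen.
    any_ar:    True if any aortic regurgitation was seen.
    """
    has_qualifying_mr = mr_jet_cm is not None and mr_jet_cm >= mr_cutoff_cm
    return bool(has_qualifying_mr or any_ar)


if __name__ == "__main__":
    print(is_screen_positive(1.7, False))   # MR above cutoff
    print(is_screen_positive(1.2, False))   # MR below cutoff, no AR
    print(is_screen_positive(None, True))   # no MR, but AR present
```

Keeping the cutoff as a parameter makes it straightforward to evaluate a different MR threshold with the same rule.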

Our educational program focused on self-directed, computer-based modules translated into Portuguese that could be completed asynchronously at the participants’ convenience without support from expert staff. Lessons were assigned for 3 consecutive weeks. Midweek, participants received a personalized quiz link by email; each quiz included 25 to 50 multiple choice and true–false questions generated using the survey feature of REDCap, an electronic data capture tool hosted at Children’s National Health System. Participants received their scores by email within 24 hours of quiz completion, and those who scored <85% were asked to repeat the week’s educational module.

During week 1, we used 6 of the WiRED International RHD “Nurse Training Modules,” a freely available web-based curriculum ( http://www.wiredhealthresources.net ), which focused on the background of RHD screening, the 2012 World Heart Federation (WHF) criteria, and measurement of MR and AR. Education during weeks 2 and 3 used self-guided PowerPoint presentations and an image library (a file of handheld echocardiographic studies shared through cloud image sharing), together with access to a proprietary software program (General Electric Gateway, Milwaukee, Wisconsin) that allowed participants to interact with the images to freeze, play, scroll, and perform measurements ( Figure 1 ). No hands-on practical application or supervised screening of patients for RHD diagnosis occurred.

Five public schools were selected as testing sites (2 primary and 3 secondary schools). All students attending these schools had previously received RHD education and been invited to participate in RHD screening through the ongoing school-based program.

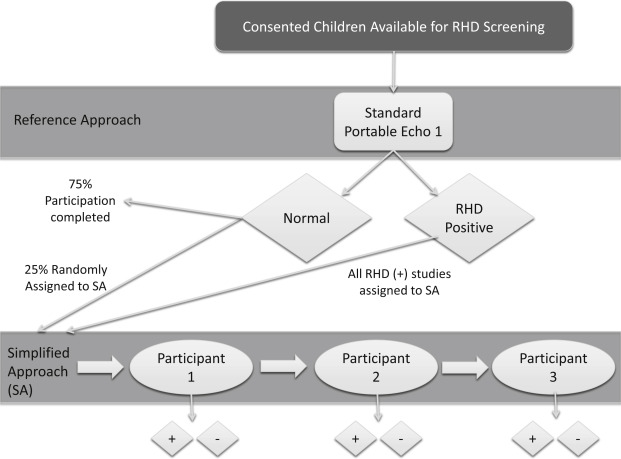

Nonexpert participants were divided into 2 teams of 3 and paired with 2 cardiologists at parallel sites. The simplified approach (a nonexpert user obtaining and interpreting images using handheld echocardiography) was compared with the reference approach (a cardiologist obtaining images with a standard portable echocardiography machine [Vivid-Q; General Electric, Milwaukee, Wisconsin] and interpreting images according to the 2012 WHF criteria). Screening occurred for 4 days, with each nonexpert user attempting to complete 50 screening echocardiograms each day, for a minimum of 200 children screened per nonexpert participant ( Figure 2 ). Nonexpert participants were blinded as to the reason (random assignment or positive screen) that children were sent for handheld evaluation and to each other’s evaluations. After each echocardiographic study, participants completed a study form to indicate whether MR ≥1.5 cm, MR ≥2.0 cm, and/or any AR were seen. They then indicated whether the screening examination was positive or negative. Data on MR ≥2.0 cm were collected to compare the sensitivity to that of the lower screening cutoff of 1.5 cm.

Study data were collected and managed using the REDCap electronic data capture system hosted at Children’s National Medical Center. The number of attempts needed to pass each quiz was recorded, and an electronic questionnaire administered after the training period assessed opinions on the content, length, and format of the course and the participants’ level of comfort with image interpretation.

The demographic and echocardiographic characteristics of children who underwent screening were described using mean and SD or proportions where appropriate. Sensitivity and specificity, with 95% CIs, were calculated for appropriate identification of RHD (borderline or definite RHD) using modified handheld criteria (MR ≥1.5 cm or any AR) and for more stringent criteria (MR ≥2.0 cm). The sensitivity and specificity of each nonexpert were also calculated independently. Reasons for nonagreement were determined through retrospective review of handheld images and study intake forms and reported as percentage by category. All data were analyzed using MedCalc for Windows version 12.2 (MedCalc Software, Ostend, Belgium).
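For readers who want to see how sensitivity, specificity, and a 95% CI follow from screening counts, a minimal sketch is given here. It assumes an exact (Clopper-Pearson) binomial interval; the text does not state which interval method was applied in MedCalc, so the bounds this sketch prints need not match the reported ones. All function names are hypothetical. The only data used are the 17 of 18 positive screens for definite RHD reported in the Results.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(f, target, iters=100):
    """Bisection on [0, 1] for f(p) = target, where f increases with p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_ci(successes, n, alpha=0.05):
    """Clopper-Pearson interval for a binomial proportion (assumed method)."""
    if successes == 0:
        lower = 0.0
    else:
        # Lower bound: p at which P(X >= successes) equals alpha / 2.
        lower = _solve_increasing(
            lambda p: 1 - binom_cdf(successes - 1, n, p), alpha / 2
        )
    if successes == n:
        upper = 1.0
    else:
        # Upper bound: p at which P(X <= successes) equals alpha / 2.
        upper = _solve_increasing(
            lambda p: 1 - binom_cdf(successes, n, p), 1 - alpha / 2
        )
    return lower, upper


def sensitivity(tp, fn):
    """Proportion of reference-positive studies that screened positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Proportion of reference-negative studies that screened negative."""
    return tn / (tn + fp)


# Definite RHD: 17 of 18 screens were positive (figure taken from the Results).
sens = sensitivity(tp=17, fn=1)
low, high = exact_ci(17, 18)
print(f"sensitivity {sens:.1%}, 95% CI {low:.1%} to {high:.1%}")
```

The same `exact_ci` call applies to specificity by passing the number of true negatives and the total number of reference-negative studies.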

Results

All 6 participants completed the educational modules and quizzes in the time allotted. For quizzes 1 and 3, there was 100% passage on the first attempt. Lower passage rates and a need for repeat module completion were seen for quiz 2, with 2 participants requiring 2 attempts and 1 participant requiring 4 attempts to pass. A breakdown of questions missed revealed that the higher first-time failure rate was secondary to the learning curve associated with hands-on use of the software (Vscan Gateway; General Electric; Milwaukee, Wisconsin) and first experience with image interpretation. Five of 6 participants completed a feedback questionnaire. Results were universally positive, with an average score of 4.6 for likability of the module format, 4.8 for confidence in diagnosing screen positive versus screen negative patients, and 4.2 for comfort with initiation of screening without expert oversight. When asked about the best part of the modules, participants cited “being able to interact with real echocardiograms,” “ability to complete the lessons on our own time,” and “interesting content and clear explanations.” When asked about the worst part of the modules, responses included “too repetitive” and that “images were too good, did not reflect reality.” Finally, 2 representative comments on their overall experience were “It’s a very interesting way to teach, especially to people all over the world” and “The modules are excellent and self-explanatory, in an understandable language and all very well detailed.”

Screening was performed using the reference approach in 1,381 children, mean age 13.6 years (SD ± 2.8), 60% female. A total of 397 children were referred to the handheld arm (209 to team 1 and 188 to team 2), including 53 cases of identified RHD (47 borderline RHD and 6 definite RHD), 336 with normal reference echocardiograms, and 8 other diagnoses (random assignment based on study identification), including 1 large atrial septal defect that was referred for closure ( Table 1 ).

| Demographics (n=397) | |
|---|---|
| Age (years) | Range 5-18; mean 13.9 (± 2.6) |
| Female | 49.1% |
| Diagnosis ∗ | |
| Normal | 336 (84.6%) |
| Borderline rheumatic heart disease | 47 (11.8%) |
| Definite rheumatic heart disease | 6 (1.6%) |
| Other | 8 (2%) |
| Functional Valve Characteristics ∗ | |
| Mitral Regurgitation | |
| Any | 141 (35.5%) |
| 1.5-1.9 cm | 18 (4.5%) |
| ≥2 cm | 60 (15.1%) |
| Mitral Stenosis | 0 |
| Aortic Regurgitation | |
| Any | 16 (4.0%) |
| ≥1 cm | 10 (2.5%) |
| Aortic Stenosis | 0 |
| Mixed Aortic and Mitral Valve Disease | 1 (0.2%) |

Combined, the participants had a sensitivity of 83% (95% CI 76% to 89%) and a specificity of 85% (95% CI 82% to 87%) for detecting any (borderline or definite) RHD. Among individual trainees, sensitivity ranged from 75% to 100% and specificity from 75% to 89% ( Figure 3 ). Overall sensitivity exclusively for detection of definite RHD was 94% (95% CI 71% to 100%), although there were only 6 cases, with RHD identified 17 of 18 times (3 scanners). If the 73 studies that were correctly identified as screen positive by the simplified approach (MR ≥1.5 cm and/or any AR) but counted as false positive according to the reference approach (MR ≥2.0 cm and/or AR ≥1.0 cm) were excluded, the overall specificity would increase to 97% (95% CI 96% to 98%). Table 2 lists the reasons for false-negative (n = 16) and false-positive (n = 179) screens. Although our study was not powered to detect differences by type of user or duration of experience, no notable differences were seen ( Figure 3 ).