CHAPTER 134 Quality Improvement and Risk Stratification for Congenital Cardiac Surgery

Over the past 5 decades, tremendous progress has been made in the diagnosis and treatment of patients with congenital cardiac malformations. Survival is now expected for many patients who have lesions previously considered untreatable. In recent years, many congenital cardiac surgeons have made substantial efforts to standardize and improve the methodologies for analyzing outcomes following the treatment of patients with congenital cardiac disease.1–119 The rationale for these efforts is to develop universally accepted tools to define and measure outcomes, and to set standards against which results can be benchmarked. The resulting data can be used to change current practice and thereby improve results. The fundamental objective of these efforts is to assess, and then improve, the quality of health care delivered by the professionals caring for patients with congenitally malformed hearts. The key tool necessary to accomplish this objective is the multi-institutional database designed to facilitate the analysis of outcomes.

Events related to hospitals in Bristol, England,121 Denver, Colorado,122–128 and Winnipeg, Canada,129 have clearly demonstrated the importance of physician-driven analysis of outcomes. For example, the Bristol Report presents the results of an inquiry into the management of the care of children receiving complex cardiac surgical services at the Bristol Royal Infirmary between 1984 and 1995, and relevant related issues. Approximately 200 recommendations are made in the Bristol report, many of which relate to the need for accurate multi-institutional outcomes databases to quantitate outcomes of care rendered to patients with congenital cardiac disease. Perhaps less well known than the Bristol Report, the Report of the Manitoba Pediatric Cardiac Surgery Inquest presents data from an inquest involving 12 children who died while undergoing, or soon after having undergone, cardiac surgery at the Winnipeg Health Sciences Centre in 1994. Clearly, these events demonstrate the importance of a meaningful and fair method of multi-institutional analyses of outcomes for congenital cardiac surgery.

NOMENCLATURE

During the 1990s, both the Society of Thoracic Surgeons (STS) and the European Association for Cardio-Thoracic Surgery (EACTS) created databases to assess the outcomes of congenital cardiac surgery.8 Beginning in 1998, these two organizations collaborated to create the International Congenital Heart Surgery Nomenclature and Database Project.6 By 2000, a common nomenclature, along with a common core minimal data set, was adopted by EACTS and STS and published in the Annals of Thoracic Surgery.6 Also in 2000, the Association for European Paediatric Cardiology published, in Cardiology in the Young, an international system of nomenclature for congenital cardiac disease named the European Paediatric Cardiac Code.4,5,49,50

The developers of these two systems of nomenclature view these initiatives as complementary rather than competitive. Consequently, on Friday, October 6, 2000, the International Nomenclature Committee for Pediatric and Congenital Heart Disease was established.49,50,55 In January 2005, this International Nomenclature Committee was constituted in Canada as the International Society for Nomenclature of Paediatric and Congenital Heart Disease. The working component of this international nomenclature society has been the International Working Group for Mapping and Coding of Nomenclatures for Paediatric and Congenital Heart Disease, also known as the Nomenclature Working Group. By 2005, the Nomenclature Working Group had cross-mapped the nomenclature of the International Congenital Heart Surgery Nomenclature and Database Project of EACTS and STS with the European Paediatric Cardiac Code of the Association for European Paediatric Cardiology, and thus created the International Paediatric and Congenital Cardiac Code (IPCCC), which is available for free download from the internet (www.IPCCC.NET).

DATABASE STANDARDS

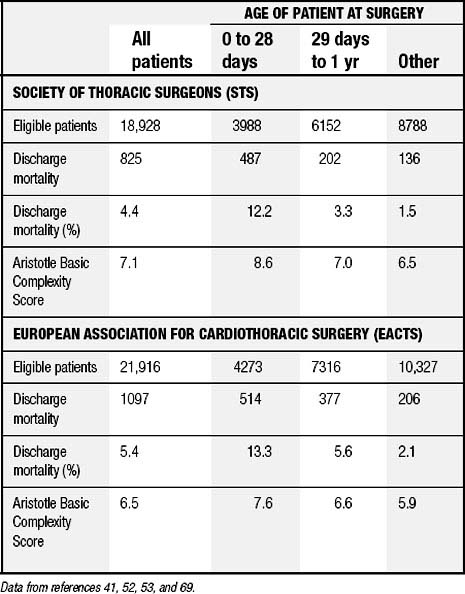

The IPCCC and the common minimum data set created by the International Congenital Heart Surgery Nomenclature and Database Project are now used by both STS and EACTS.58,63,69 From 1998 through 2007, this nomenclature and database were used by both of these organizations to analyze outcomes of over 150,000 patients undergoing surgical treatment for congenital cardiac disease.76,82 Table 134-1 shows data culled from an analysis of over 40,000 patients undergoing surgery from 1998 through 2004.69 Many publications generated from these two databases have reported outcomes after treatment for congenital cardiac disease in general, as well as outcomes for specific lesions.58,63,69,85

Table 134–1 Aggregate Data from STS and EACTS for Surgery Performed from 1998 through 2004, Inclusive

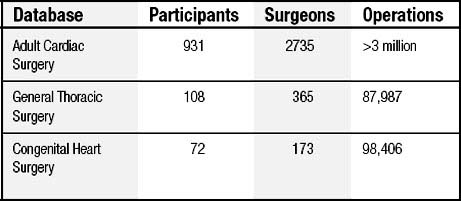

The STS database is the largest clinical cardiothoracic surgical database in North America,88 and it includes (as of August 8, 2008) 1111 participating sites with 3273 participating surgeons (Table 134-2).

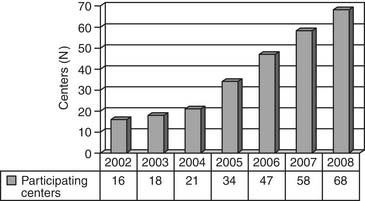

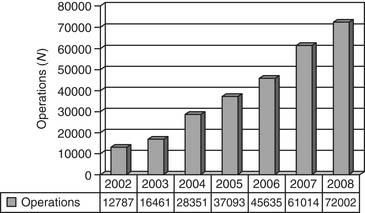

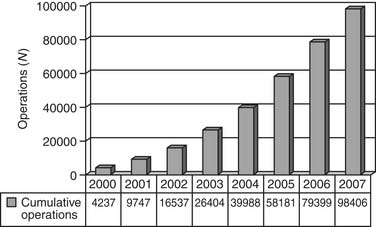

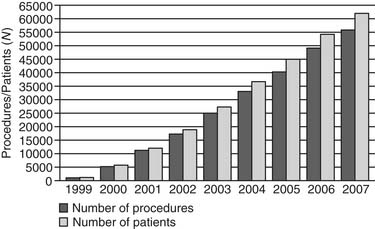

The Report of the 2005 STS Congenital Heart Surgery Practice and Manpower Survey, undertaken by the STS Workforce on Congenital Heart Surgery, documented that 122 centers in the United States and eight centers in Canada perform pediatric and congenital heart surgery.130 As of June 2008, the STS Congenital Heart Surgery Database contained data from 68 of these 130 North American centers, making it the largest database in North America dealing with congenital cardiac malformations. It has grown annually since its inception, in terms of both the number of participating centers submitting data and the number of operations analyzed (Figs. 134-1, 134-2, and 134-3). As of August 2008, the entire STS Congenital Heart Surgery Database contained data from 98,406 operations. The aggregate spring 2008 report from this database covered the 4-year window of data harvest beginning January 1, 2004, and ending December 31, 2007, and included 72,002 operations submitted from 68 centers in North America (67 in the United States and one in Canada). One Japanese center also submits data, but these data are not included in the aggregate STS report.

By January 1, 2008, the EACTS Congenital Heart Surgery Database contained 61,750 operations performed on 53,402 patients, including 12,109 operations on neonates, 20,487 on infants, 25,102 on children, and 4052 on adults (Fig. 134-4). The EACTS congenital registry continues to grow, recently adding between 5000 and 10,000 operations each year. This registry includes 274 units from 62 countries. Participants have access to over 300 online reports.

STRATIFICATION OF COMPLEXITY

Quantifying complexity is important because, in pediatric cardiac surgery, analysis of outcomes using raw mortality, without adjustment for complexity, is inadequate. The mix of cases can vary greatly from program to program, so without stratification of complexity the analysis of outcomes will be flawed. Two major multi-institutional efforts to measure the complexity of congenital heart surgery are the Risk Adjustment for Congenital Heart Surgery-1 (RACHS-1) system∗ and the Aristotle Complexity Score.† The EACTS and STS congenital databases have included the Aristotle Complexity Score in their reports since 2002.41,52,53,59,72,78,86 In 2006, both databases also incorporated the RACHS-1 method into their reports.72,78,86

To understand the RACHS-1 system and the Aristotle Complexity Score, one must first understand the following methods of adjusting for case mix100: (1) stratification, (2) direct standardization, (3) regression analysis (i.e., risk modeling), and (4) indirect standardization. All four of these methods are considered forms of risk adjustment; however, only the third and fourth are considered risk modeling.

Stratification, also known as complexity stratification and risk stratification, is a method of adjusting for case mix in which patients are divided into relatively homogeneous groups called strata.100 Comparisons of outcomes can then be made separately in each stratum. The goal is to ensure that comparisons are always performed on comparable patients. If patients in a stratum have a similar risk for adverse outcomes, then patients in the same stratum at different hospitals should be comparable. Although stratification is simple to explain and interpret, it does have limitations in its ability to adjust for case mix. First, the categorization of continuous variables is arbitrary. Second, stratification can adjust for only a small number of confounding variables. Finally, stratification controls only for differences in the variables that were used to create the strata. Other variables that are not used to create the strata may also have an impact on outcomes.
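The stratified comparison described above can be sketched in a few lines of Python. This is a minimal illustration only: the records, the hospital labels, and the function name `stratum_mortality` are hypothetical, and the strata stand in for any complexity grouping, such as a risk category.

```python
from collections import defaultdict

# Hypothetical records: (hospital, stratum, died before discharge).
records = [
    ("A", 1, False), ("A", 1, False), ("A", 1, True), ("A", 2, True),
    ("B", 1, False), ("B", 2, True), ("B", 2, False), ("B", 2, False),
]

def stratum_mortality(records):
    """Return {(hospital, stratum): (deaths, cases, rate)}.

    Rates are computed separately within each stratum, so hospitals are
    only ever compared against one another inside the same stratum.
    """
    deaths = defaultdict(int)
    cases = defaultdict(int)
    for hospital, stratum, died in records:
        cases[(hospital, stratum)] += 1
        deaths[(hospital, stratum)] += int(died)
    return {key: (deaths[key], cases[key], deaths[key] / cases[key]) for key in cases}

# Print grouped by stratum so that like is compared with like.
result = stratum_mortality(records)
for (hospital, stratum), (d, n, rate) in sorted(result.items(), key=lambda item: (item[0][1], item[0][0])):
    print(f"stratum {stratum}, hospital {hospital}: {d}/{n} = {rate:.2f}")
```

With only one patient in some strata, the sketch also shows why stratification alone struggles: stratum-specific rates based on tiny denominators are unstable.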

Direct standardization is a technique that addresses the difficulty of interpreting many separate stratum-specific comparisons. With direct standardization, one calculates the stratum-adjusted standardized rate of mortality, combining multiple stratum-specific estimates into a single number. The adjusted standardized rate of mortality “is the rate of mortality that would be observed at a hospital if all of the hospital’s stratum-specific rates of mortality remained the same but the proportion of patients in each stratum was altered to reflect a ‘standard’ case-mix in some reference population.”100 Direct standardization therefore estimates what each hospital’s mortality would be if that hospital operated on the entire population in the database while its stratum-specific rates of mortality remained the same.100
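The reweighting behind direct standardization can be sketched as follows, assuming each hospital's stratum-specific mortality rates are already known. The function name `direct_standardized_rate`, the stratum labels, and all numbers are hypothetical.

```python
def direct_standardized_rate(stratum_rates, reference_counts):
    """Directly standardized mortality rate for one hospital.

    stratum_rates: {stratum: the hospital's observed mortality rate in that stratum}
    reference_counts: {stratum: number of patients in the reference population}

    The hospital's stratum-specific rates are held fixed and reweighted by the
    reference population's case mix (its proportion of patients per stratum).
    """
    total = sum(reference_counts.values())
    return sum(stratum_rates[s] * n / total for s, n in reference_counts.items())

# Hypothetical hospital: 2% mortality in low-risk cases, 20% in high-risk cases,
# standardized to a reference population that is 80% low risk and 20% high risk.
hospital_rates = {"low": 0.02, "high": 0.20}
reference_mix = {"low": 800, "high": 200}
print(round(direct_standardized_rate(hospital_rates, reference_mix), 3))
```

The sketch raises a `KeyError` if the hospital has no rate for a stratum present in the reference population, which mirrors a practical limitation of direct standardization: a stratum-specific rate is needed for every stratum in the standard case mix.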

Regression analysis, also known as regression modeling and risk modeling, is a method of adjusting for case mix in which a mathematical equation is developed that predicts an individual patient’s risk of experiencing an event, such as mortality, based on clinically relevant variables, such as age, weight, and cardiac diagnosis.100 The choice of clinically relevant variables may be based on judgment or may be determined empirically from a large data set. Regression modeling is often used rather than stratification or direct standardization when the analysis necessitates adjustment for several confounder variables simultaneously.
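To make the idea of a predictive equation concrete, the following sketch computes one patient's predicted risk from a logistic regression model, a common choice for binary outcomes such as mortality. The intercept, coefficients, and variable names are invented for illustration and do not correspond to any published congenital cardiac model.

```python
import math

# Hypothetical coefficients for illustration only; NOT from any published model.
INTERCEPT = -4.0
COEFFICIENTS = {
    "neonate": 1.2,              # 1 if age <= 30 days, else 0
    "weight_lt_2_5kg": 0.8,      # 1 if weight < 2.5 kg, else 0
    "high_risk_diagnosis": 1.5,  # 1 if a (hypothetical) high-risk diagnosis, else 0
}

def predicted_risk(patient):
    """Logistic model: risk = 1 / (1 + exp(-(b0 + sum of b_j * x_j))).

    Variables missing from `patient` are treated as 0.
    """
    linear = INTERCEPT + sum(b * patient.get(name, 0) for name, b in COEFFICIENTS.items())
    return 1.0 / (1.0 + math.exp(-linear))

print(round(predicted_risk({"neonate": 1, "high_risk_diagnosis": 1}), 3))
```

In practice the coefficients would be estimated from a large data set; the point here is only that, once estimated, the equation turns each patient's clinical variables into an individual probability.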

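Indirect standardization uses regression analysis and calculates “a predicted probability of the outcome for each patient within a hospital, summing these probabilities to determine the hospital’s expected number of outcomes, and finally comparing the observed number of outcomes to the expected number. The ratio of the observed to expected numbers of outcomes is often called the ‘observed-to-expected ratio,’ and is commonly termed the O/E ratio.”100 The O/E ratio is calculated with the following formula:

$$\frac{O}{E} = \frac{\sum_{i=1}^{n} y_i}{\sum_{i=1}^{n} \hat{p}_i}$$

where $n$ is the number of patients at the hospital, $y_i$ equals 1 if patient $i$ experienced the outcome and 0 otherwise, and $\hat{p}_i$ is that patient’s predicted probability of the outcome from the regression model.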
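The observed-to-expected calculation for a single hospital can be sketched as follows, assuming each patient's predicted probability has already been obtained from a regression model. The function name `observed_to_expected` and the patient data are hypothetical.

```python
def observed_to_expected(outcomes, predicted_risks):
    """Indirect standardization for one hospital.

    outcomes: list of 0/1 values (1 = outcome occurred, e.g., death before discharge)
    predicted_risks: model-predicted probability of the outcome for the same patients
    Returns (observed count, expected count, O/E ratio).
    """
    if len(outcomes) != len(predicted_risks):
        raise ValueError("one predicted risk per patient is required")
    observed = sum(outcomes)
    expected = sum(predicted_risks)  # expected number of outcomes
    if expected <= 0:
        raise ValueError("expected number of outcomes must be positive")
    return observed, expected, observed / expected

# Hypothetical hospital with five patients.
observed, expected, ratio = observed_to_expected([0, 1, 0, 0, 1], [0.1, 0.4, 0.2, 0.1, 0.2])
print(observed, round(expected, 2), round(ratio, 2))
```

An O/E ratio above 1 indicates more outcomes than the model predicted for that hospital's case mix; a ratio below 1 indicates fewer.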

Table 134-3 summarizes fundamental differences and similarities between risk adjustment by complexity stratification and risk modeling by regression analysis. Both are forms of risk adjustment; however, regression analysis is true risk modeling, whereas complexity stratification is not. Regression analysis requires simplifying assumptions about the relationship between clinical factors and patient risk; complexity stratification does not.100 Most regression analyses produce only a single statistic summarizing a hospital’s overall outcome. Both stratification and regression analysis adjust only for risk factors that are explicitly included in the stratification scheme or model. Observed differences in outcome might still be explained by factors that were either not measured or not included in the regression analysis or stratification scheme.100

Table 134–3 Two Methods of Case-Mix Adjustment (or Risk Adjustment): Differences between Stratification and Regression Analysis

| Stratification | Regression Analysis |
|---|---|
| A method of analysis in which the data are divided into relatively homogeneous groups (called strata). The data are analyzed in each stratum. | The mortality rate is adjusted for differences in the composition of the patient population at the hospital of interest and the comparison group. It is an estimate of what a given hospital’s mortality rate would be if its case mix was the same as in the comparison group. |
| Compares actual observed mortality rates. | Estimates a hypothetical quantity. |
| Simple, does not require a model. | Usually requires statistical modeling. |
| Results are simple to calculate and interpret. | Interpretation is more complicated. |
| No assumptions | Requires assumptions (such as assuming that a hospital that does well with low-risk cases will do well with high-risk cases); it may be difficult to verify these assumptions. |
| Produces separate estimates for each stratum. Can produce a single summary measure by using direct standardization. | Produces a single summary measure. |
| Can adjust for only a small number of confounder variables. | Useful when there are many confounder variables. |
| Used in the STS Congenital Heart Surgery Database. | Used in the STS Adult Heart Surgery Database. |

STS, Society of Thoracic Surgeons.

Adapted from O’Brien SM, Gauvreau K. Cardiol Young 2008;18(Suppl 2):145-51.

The ability of a complexity stratification tool to discriminate between patients who do and do not experience a given outcome, such as mortality, can be quantified by calculating the area under the receiver operating characteristic curve,133 or C-statistic, as determined by univariable logistic regression. The C-statistic represents the probability that a randomly selected patient who experienced the outcome of interest, such as mortality prior to hospital discharge, had a higher predicted risk for the outcome than a randomly selected patient who did not experience the outcome. The C-statistic generally ranges from 0.5 to 1.0, with 0.5 representing no discrimination (equivalent to a coin flip) and 1.0 representing perfect discrimination. For example, the risk-adjustment model of the STS Adult Cardiac Surgery Database for predicting 30-day mortality after coronary artery bypass grafting contains 28 clinical variables and has a C-statistic of 0.78.133,134
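The probability interpretation of the C-statistic can be computed directly by comparing every patient who experienced the outcome with every patient who did not. The sketch below does this by brute force, counting tied predictions as one-half (the usual convention). The function name and data are hypothetical; for large databases, a rank-based formula would be more efficient.

```python
from itertools import product

def c_statistic(predicted, outcomes):
    """Probability that a randomly chosen patient with the outcome has a higher
    predicted risk than a randomly chosen patient without it (ties count 0.5)."""
    events = [p for p, y in zip(predicted, outcomes) if y]
    non_events = [p for p, y in zip(predicted, outcomes) if not y]
    if not events or not non_events:
        raise ValueError("need at least one patient with and one without the outcome")
    score = 0.0
    for e, n in product(events, non_events):  # every event / non-event pair
        if e > n:
            score += 1.0
        elif e == n:
            score += 0.5
    return score / (len(events) * len(non_events))

# Hypothetical predicted risks and outcomes (1 = died before discharge).
print(round(c_statistic([0.9, 0.3, 0.4, 0.1, 0.6], [1, 0, 1, 0, 0]), 3))
```

Here 5 of the 6 event/non-event pairs are correctly ordered, so the C-statistic is 5/6.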

Risk Adjustment for Congenital Heart Surgery-1

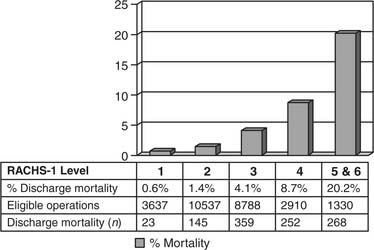

The RACHS-1 method46,54,131,135–137 was developed by a group of investigators under the leadership of Dr. Kathy Jenkins at Children’s Hospital Boston.46 The goal was to adjust for baseline differences in case mix and risk when comparing mortality prior to hospital discharge among groups of patients younger than 18 years undergoing surgery for congenital cardiac disease. A nationally representative 11-member panel of pediatric cardiologists and cardiac surgeons used clinical judgment to place 207 surgical procedures, defined by codes from the Current Procedural Terminology, Fourth Edition (CPT-4) and the International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM), into six groups judged to have similar risks for in-hospital mortality. These risk categories were then refined using empirical data on mortality prior to hospital discharge from the Pediatric Cardiac Care Consortium and three statewide hospital discharge databases (Illinois from 1994, and Massachusetts and California from 1995). The final method included six risk categories, with category 1 representing the lowest risk and category 6 the highest, as well as three additional clinical factors: (1) age at operation, in three categories (30 days or less, 31 days to 1 year, and more than 1 year), (2) prematurity, and (3) the presence of a major noncardiac structural anomaly such as a tracheoesophageal fistula.
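The patient-level inputs to the RACHS-1 method can be represented as a simple record. In this sketch, the class and function names are hypothetical; age is measured in days, a year is assumed to be 365 days when applying the age cut points and the under-18 limit, and the assignment of a procedure to a risk category (which in practice comes from the published RACHS-1 procedure lists) is taken as given rather than implemented.

```python
from dataclasses import dataclass

DAYS_PER_YEAR = 365  # assumption for applying the age cut points

@dataclass
class Rachs1Inputs:  # hypothetical container, not part of any published software
    risk_category: int            # 1 (lowest risk) to 6 (highest risk)
    age_days: int
    premature: bool
    major_noncardiac_anomaly: bool

def age_group(age_days):
    """Three age-at-operation groups used as a RACHS-1 clinical factor."""
    if age_days <= 30:
        return "30 days or less"
    if age_days <= DAYS_PER_YEAR:
        return "31 days to 1 year"
    return "more than 1 year"

def rachs1_covariates(inputs):
    """Validate the inputs and return the category plus the three clinical factors."""
    if not 1 <= inputs.risk_category <= 6:
        raise ValueError("RACHS-1 risk categories run from 1 to 6")
    if not 0 <= inputs.age_days < 18 * DAYS_PER_YEAR:
        raise ValueError("RACHS-1 was developed for patients younger than 18 years")
    return {
        "risk_category": inputs.risk_category,
        "age_group": age_group(inputs.age_days),
        "premature": inputs.premature,
        "major_noncardiac_anomaly": inputs.major_noncardiac_anomaly,
    }

patient = Rachs1Inputs(risk_category=4, age_days=12, premature=True, major_noncardiac_anomaly=False)
print(rachs1_covariates(patient))
```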

Although the use of administrative data coded with the ICD-9-CM has sometimes been identified as a potential shortcoming, the developers of the RACHS-1 method point out that these administrative data have, up to now, been used to support a significant fraction of research about health-care services pertinent to pediatric cardiology. Furthermore, algorithms that use these administrative data to examine outcomes of surgery for congenital cardiac diseases have been endorsed by the U.S. Agency for Healthcare Research and Quality.54 It is recognized that administrative data coded with the ICD-9-CM are frequently the only available data that are population based and thus can be a crucial source of information for certain types of research. The RACHS-1 method has shown excellent performance in a variety of settings and has been used extensively in research on clinical outcomes in the United States and abroad.131,136,137

The relationship between RACHS-1 risk category and observed mortality at hospital discharge has been evaluated in several databases. In the administrative hospital discharge database used in the development of the RACHS-1 method, the C-statistic was 0.749 for risk category alone, and 0.814 when additional clinical factors were incorporated. In the clinical database of the Pediatric Cardiac Care Consortium, the C-statistic was 0.784 for risk category alone, and 0.811 with the additional factors.46 Subsequent work in a variety of databases has yielded C-statistics for the RACHS-1 method ranging from 0.74 to 0.85.

The EACTS and STS congenital databases have included the RACHS-1 system in their reports since 2006. In the 2006 report of the STS Congenital Heart Surgery Database,78 85.8% of eligible cardiac index operations (27,202 of 31,719) could be analyzed with the RACHS-1 system (Fig. 134-5).

The Aristotle Complexity Score

In 1999, under the leadership of Dr. Francois Lacour-Gayet, the Aristotle Committee was created to address the issue of stratification of complexity in surgery for congenital cardiac diseases. The developers of this tool recognized that standard methods of benchmarking in quality of care assessment were based on stratification of risk, with nearly exclusive emphasis on the measurement of the outcome of operative mortality. They believed that to assess outcomes, including comparison of outcomes between centers, and to establish a platform for continuous improvement in quality, stratification based on the risk for mortality alone is insufficient. The fundamental principle of the Aristotle Complexity Score56,57,63,80,84,138–141 is to define complexity as a constant for the challenge presented by a given surgical procedure.56 The Aristotle committee postulated that the complexity of a given procedure in surgery for congenital cardiac diseases is the sum of three factors or indices: (1) the potential for operative mortality, (2) the potential for operative morbidity, and (3) the technical difficulty of the operation.

Lacour-Gayet and the Aristotle Committee have differentiated the concepts of complexity and risk and have stated, “Complexity is a constant precise value for a given patient at a given point in time; performance varies between centers and surgeons. In other words, in the same exact patient with the same exact pathology, complexity is a constant precise value for that given patient at a given point in time. The risk for that patient will vary between centers and surgeons because performance varies between centers and surgeons.”63

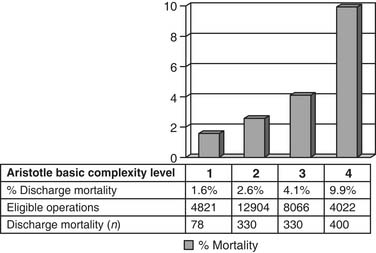

Under the leadership of Lacour-Gayet and the Aristotle Committee, the Aristotle Basic Complexity Score was developed by a panel of experts composed of 50 congenital heart surgeons from 23 countries, representing several major professional societies. The Aristotle Basic Complexity Score allocates to each operation a basic score ranging from 1.5 to 15, with 15 being the most complex, on the basis of the primary procedure of that operation as selected from the Procedure Short List of the version of the IPCCC derived from the International Congenital Heart Surgery Nomenclature and Database Project of the STS and EACTS. The Aristotle Basic Complexity Score is the sum of the scores assigned to a given procedure for the three components of complexity (potential for mortality, potential for morbidity, and technical difficulty), each of which ranges from 0.5 to 5. To facilitate analysis across large populations of patients, each procedure is then assigned an Aristotle Basic Complexity Level, an integer from 1 to 4 based on the Aristotle Basic Complexity Score (Table 134-4).

Table 134–4 Correlation between Aristotle Basic Complexity Levels and Scores

| Aristotle Basic Complexity Level | Aristotle Basic Complexity Score | Procedures∗ (N) |
|---|---|---|
| 1 | 1.5 to 5.9 | 29 |
| 2 | 6.0 to 7.9 | 46 |
| 3 | 8.0 to 9.9 | 45 |
| 4 | 10.0 to 15.0 | 25 |

∗ Of 145 procedures from the original Procedure Short List of the International Nomenclature used by the Society of Thoracic Surgeons and the European Association for Cardio-Thoracic Surgery.
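The relationship between the three Aristotle components, the basic score, and the basic level can be sketched as follows. The function names and the component values in the example are hypothetical (not taken from the published score table), and because Table 134-4 reports scores to one decimal place, the sketch assumes that the level boundaries fall at 6.0, 8.0, and 10.0.

```python
def aristotle_basic_score(mortality, morbidity, difficulty):
    """Sum of the three components, each scored 0.5 to 5 (total 1.5 to 15)."""
    for value in (mortality, morbidity, difficulty):
        if not 0.5 <= value <= 5.0:
            raise ValueError("each component must lie between 0.5 and 5")
    return mortality + morbidity + difficulty

def aristotle_basic_level(score):
    """Map a basic score to a level (1 to 4) using the Table 134-4 cut points."""
    if not 1.5 <= score <= 15.0:
        raise ValueError("basic score must lie between 1.5 and 15")
    if score < 6.0:
        return 1
    if score < 8.0:
        return 2
    if score < 10.0:
        return 3
    return 4

# Hypothetical component values for one procedure.
score = aristotle_basic_score(3.0, 2.5, 3.0)
print(score, aristotle_basic_level(score))
```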

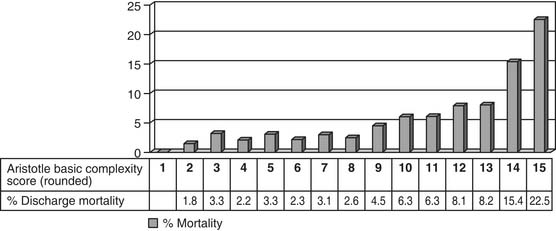

Both the score and the level are useful tools, and the appropriate tool can be chosen to match the required analysis.57,63 Initial data from the STS and EACTS multi-institutional databases indicate that the Aristotle Basic Complexity Score and Aristotle Basic Complexity Level correlate well with mortality prior to discharge from the hospital after surgery for congenital cardiac diseases.63,80,84

In an analysis of data from the STS and EACTS congenital databases, the Aristotle Basic Complexity Score is associated with (1) mortality, with a C-statistic of 0.70, and (2) prolonged postoperative length of hospital stay, defined as postoperative length of stay greater than 21 days, with a C-statistic of 0.67.84 This implies that the Aristotle Basic Complexity Score generally discriminates between low-risk and high-risk operations.84 In this study,84 prolonged postoperative length of hospital stay was regarded as a very general proxy measure of morbidity. Additional concomitant procedures may alter the complexity of an operation. Discrimination was slightly greater when the analysis was restricted to operations consisting of a single procedure, and excluded operations with multiple-component procedures, with a C-statistic of 0.73 for mortality and a C-statistic of 0.70 for prolonged postoperative length of hospital stay. When the mortality and morbidity components of the Aristotle Basic Complexity Score were examined separately, the mortality component predicted mortality with a C-statistic of 0.68, and the morbidity component predicted prolonged postoperative length of hospital stay with a C-statistic of 0.67. Finally, when fixed, hospital-specific intercepts were added to the logistic regression models along with the Aristotle Basic Complexity Score, the C-statistic was 0.74 for mortality and 0.72 for prolonged postoperative length of hospital stay. For comparison, the C-statistics of the models containing hospital effects only, excluding Aristotle Basic Complexity Score, were 0.63 for mortality and 0.62 for prolonged postoperative length of hospital stay. Thus, adding the Aristotle Basic Complexity Score to a model containing hospital effects appears to improve its discrimination.84

The STS and EACTS congenital databases have included the Aristotle Basic Complexity Score and Aristotle Basic Complexity Level in their reports since 2002. In the 2006 report of the STS Congenital Heart Surgery Database,78 94.0% of eligible cardiac index operations (29,813 of 31,719) could be analyzed with the Aristotle Basic Complexity Score and Level (Figs. 134-6 and 134-7).

The Aristotle Comprehensive Complexity Score adds further discrimination to the Basic Score by incorporating two sorts of patient-specific complexity modifiers: (1) procedure-dependent factors, including anatomic factors, associated procedures, and age at procedure, and (2) procedure-independent factors, including general factors such as weight and prematurity, clinical factors such as preoperative sepsis or renal failure, extracardiac factors such as duodenal atresia and imperforate anus, and surgical factors such as reoperative sternotomy. Up to 10 additional points are added to the Basic Score to account for the added complexity and challenge imposed by these modifying factors. The Aristotle Comprehensive Complexity Score has been used by numerous investigators to analyze the outcomes of complex procedures.138–141
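The capping rule for the comprehensive score can be sketched as follows. The function name, modifier names, and point values are hypothetical and are not the published Aristotle modifier values; the sketch only shows that modifier points are added to the basic score and limited to 10 in total.

```python
MAX_MODIFIER_POINTS = 10.0  # maximum total added by patient-specific modifiers

def aristotle_comprehensive_score(basic_score, modifier_points):
    """Basic score plus patient-specific modifier points, capped at 10 points.

    modifier_points: {modifier name: points}; values are assumed non-negative.
    """
    if any(points < 0 for points in modifier_points.values()):
        raise ValueError("modifier points are assumed to be non-negative")
    added = min(sum(modifier_points.values()), MAX_MODIFIER_POINTS)
    return basic_score + added

# Hypothetical modifiers for one patient (illustrative point values only).
modifiers = {"prematurity": 2.0, "preoperative_sepsis": 4.0, "reoperative_sternotomy": 3.0}
print(aristotle_comprehensive_score(8.5, modifiers))
```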

STS-EACTS Congenital Heart Surgery Mortality Score and Categories

The newest tool for complexity stratification available for the EACTS and STS Congenital Heart Surgery Databases is the “2008 STS-EACTS Congenital Heart Surgery Mortality Score,” which, when modeled with three patient-level factors (age, weight, and preoperative length of stay), has a C-statistic of 0.816.118 The tool was developed primarily from objective data, with minimal use of subjective probability. The risk of mortality prior to hospital discharge after cardiac surgery was estimated for 148 types of operative procedures using actual data from 77,294 patients entered into the Congenital Heart Surgery Databases of the EACTS (33,360 patients) and the STS (43,934 patients) between 2002 and 2007. Procedure-specific mortality rate estimates were calculated using a Bayesian model that adjusted for small denominators. Each procedure was assigned a numeric score (the 2008 STS-EACTS Congenital Heart Surgery Mortality Score) ranging from 0.1 to 5.0 based on the estimated mortality rate. Procedures were also sorted by increasing risk and grouped into five categories (the 2008 STS-EACTS Congenital Heart Surgery Mortality Categories), which were chosen to be optimal with respect to minimizing within-category variation and maximizing between-category variation. Model performance was subsequently assessed in an independent validation sample (n = 27,700) and compared with two existing methods: RACHS-1 categories and Aristotle Basic Complexity Scores (Table 134-5).118
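The two statistical steps described above, stabilizing procedure-specific mortality rates that rest on small denominators and grouping sorted procedures into categories, can be illustrated with simplified stand-ins. This is explicitly not the published method: the sketch substitutes a basic beta-binomial shrinkage estimate with a fixed, hypothetical prior strength for the Bayesian model, and an exact dynamic-programming partition that minimizes the unweighted within-category sum of squares for the category-selection step. All function names and data are hypothetical, and the mapping from estimated mortality to the 0.1 to 5.0 score is not shown.

```python
def shrunken_rates(procedures, prior_strength=20.0):
    """procedures: {name: (deaths, cases)}. Beta-binomial posterior means that pull
    small-denominator rates toward the pooled rate (prior_strength is hypothetical)."""
    total_deaths = sum(d for d, n in procedures.values())
    total_cases = sum(n for d, n in procedures.values())
    if total_cases <= 0 or prior_strength <= 0:
        raise ValueError("need positive case counts and prior strength")
    prior_mean = total_deaths / total_cases
    return {name: (d + prior_mean * prior_strength) / (n + prior_strength)
            for name, (d, n) in procedures.items()}

def optimal_categories(values, k):
    """Split sorted values into k contiguous groups that minimize the total
    within-group sum of squared deviations (exact dynamic programming)."""
    xs = sorted(values)
    n = len(xs)
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= number of values")

    def sse(i, j):  # within-group sum of squares for xs[i:j]
        group = xs[i:j]
        mean = sum(group) / len(group)
        return sum((x - mean) ** 2 for x in group)

    inf = float("inf")
    cost = [[inf] * (n + 1) for _ in range(k + 1)]  # cost[g][j]: best split of xs[:j] into g groups
    split = [[0] * (n + 1) for _ in range(k + 1)]   # start index of the last group
    cost[0][0] = 0.0
    for g in range(1, k + 1):
        for j in range(g, n + 1):
            for i in range(g - 1, j):
                c = cost[g - 1][i] + sse(i, j)
                if c < cost[g][j]:
                    cost[g][j], split[g][j] = c, i
    groups, j = [], n
    for g in range(k, 0, -1):  # walk the split table backwards
        i = split[g][j]
        groups.append(xs[i:j])
        j = i
    return groups[::-1]

procedures = {  # hypothetical (deaths, cases) per procedure
    "procedure_a": (1, 500), "procedure_b": (2, 300), "procedure_c": (10, 100),
    "procedure_d": (3, 20), "procedure_e": (30, 150),
}
rates = shrunken_rates(procedures)
for group in optimal_categories(rates.values(), 3):
    print([round(r, 3) for r in group])
```

Because the total sum of squares of the sorted values is fixed, minimizing the within-category sum of squares is equivalent to maximizing the between-category sum of squares, which matches the two stated goals.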

Table 134–5 STS-EACTS Congenital Heart Surgery Mortality Score and Categories

| Method of Modeling Procedures | Model without Patient Covariates | Model with Patient Covariates |
|---|---|---|
| 2008 STS-EACTS Congenital Heart Surgery Mortality Score | C = 0.787 | C = 0.816 |
| 2008 STS-EACTS Congenital Heart Surgery Mortality Categories | C = 0.778 | C = 0.812 |
| RACHS-1 Categories | C = 0.745 | C = 0.802 |
| Aristotle Basic Complexity Score | C = 0.687 | C = 0.795 |

(From: O’Brien SM, Clarke DR, Jacobs JP, Jacobs ML, Lacour-Gayet FG, Pizarro C, Welke KF, Maruszewski B, Tobota Z, Miller WJ, Hamilton L, Peterson ED, Mavroudis C, Edwards FH. An Empirically Based Tool for Analyzing Outcomes of Congenital Heart Surgery. The Journal of Thoracic and Cardiovascular Surgery, accepted for publication, in press.)
