Principles of Imaging

James D. Thomas

Overview

Accurate imaging is essential for assessing cardiac anatomy, function, perfusion, and metabolism. Revolutionary advances have been made in the last 20 years primarily because of improvements in computer technology and digital signal processing. The heart can be imaged by using x-rays (radiography, angiography, computed tomography), γ-rays (radionuclide imaging, positron emission tomography), sound waves (Doppler echocardiography), and the magnetic properties of the hydrogen nucleus (magnetic resonance imaging). Tests can be compared in terms of their ability to detect and exclude disease (sensitivity and specificity, respectively), but the predictive value of a test depends largely on the prevalence of the disorder in the population being tested (Bayesian analysis). Computer processing is important both in generating images and in enhancing the images for display (smoothing and edge enhancement); Fourier transformation is commonly used to analyze the frequency content of images and data in Doppler echocardiography, magnetic resonance imaging, and radionuclide ventriculography. Digital image storage is becoming feasible with reductions in computer costs and agreement on the Digital Imaging and Communications in Medicine (DICOM) standard for medical image exchange created by the National Electrical Manufacturers Association (NEMA). The massive storage requirements can be reduced by careful clinical editing of studies and with digital compression algorithms. All-digital storage and transmission will greatly enhance the value of cardiac studies and facilitate telemedicine.
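The Bayesian point can be made concrete with the standard 2 × 2 table relationships. The minimal sketch below computes positive and negative predictive values (PPV, NPV) from sensitivity, specificity, and prevalence; the function name and the 90% sensitivity and specificity figures are illustrative assumptions, not values for any particular cardiac test.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test applied to a population with a given prevalence.

    All three inputs are fractions strictly between 0 and 1 (an assumption of
    this sketch, which avoids division by zero at the extremes).
    """
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 < value < 1.0:
            raise ValueError(f"{name} must lie strictly between 0 and 1")
    # Expected fraction of the tested population falling in each cell of the 2 x 2 table.
    true_pos = sensitivity * prevalence
    false_neg = (1.0 - sensitivity) * prevalence
    true_neg = specificity * (1.0 - prevalence)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # P(disease | positive test)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | negative test)
    return ppv, npv


if __name__ == "__main__":
    # The same hypothetical test (90% sensitive, 90% specific) in three populations.
    for prevalence in (0.05, 0.50, 0.90):
        ppv, npv = predictive_values(0.90, 0.90, prevalence)
        print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

Running the loop shows the test itself never changes, yet at low prevalence most positive results are false positives, and at high prevalence it is the negative predictive value that collapses.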

Glossary

Convolution

Altering a pixel based on the values of surrounding pixels.

DICOM

Digital Imaging and Communications in Medicine, a formatting standard that allows the exchange of medical images.

Digital compression

Recoding an image so that it requires less storage. Lossless compression does not change the appearance at all but yields relatively little compression, whereas lossy compression yields greater savings at the cost of some alteration of the image.

Doppler principle

The principle according to which waves, including ultrasound signals, undergo a frequency shift proportional to the velocity of the object that emits or reflects them; in the present context, that object is moving blood.

Fourier analysis

Analysis of the frequency content of an image or signal.

γ-Rays

High-energy photons produced by nuclear decay.

Nuclear magnetic resonance

Resonant absorption and re-emission of radiofrequency energy by certain nuclei (typically the hydrogen proton) that spin, or precess, in the presence of a magnetic field.

Photons

“Particles” of electromagnetic radiation.

Piezoelectric

A property of matter by which electricity is converted into vibration and vice versa.

Point processing

Altering an image gray scale, pixel by pixel.

Positrons

Positive electrons (antimatter); when a positron encounters an electron, both are annihilated and two 511-keV photons are emitted in opposite directions; the process is the physical basis for positron emission tomography.

x-Rays

High-energy photons produced by rearrangement of an atom’s electron cloud.
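The glossary entries for point processing and convolution correspond to the smoothing and edge-enhancement operations mentioned in the Overview. The NumPy sketch below illustrates both. The gamma curve, the two 3 × 3 kernels, the edge-replication padding, and the function names are illustrative assumptions, not the processing used by any particular imaging system; the kernel is assumed to have odd dimensions.

```python
import numpy as np


def point_process(image: np.ndarray, gamma: float) -> np.ndarray:
    """Point processing: remap each 8-bit gray level independently of its neighbours.

    A gamma curve is used here as one example of a gray-scale map.
    """
    normalized = image.astype(float) / 255.0
    remapped = 255.0 * normalized ** gamma
    return np.clip(np.rint(remapped), 0, 255).astype(np.uint8)


def convolve2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Convolution: each output pixel is a weighted sum of the input pixels around it."""
    flipped = kernel[::-1, ::-1]  # true convolution (unlike correlation) flips the kernel
    kh, kw = flipped.shape
    ph, pw = kh // 2, kw // 2
    # Replicate the border pixels so the output is the same size as the input.
    padded = np.pad(image.astype(float), ((ph, ph), (pw, pw)), mode="edge")
    out = np.empty(image.shape, dtype=float)
    for row in range(image.shape[0]):
        for col in range(image.shape[1]):
            out[row, col] = np.sum(padded[row:row + kh, col:col + kw] * flipped)
    return out


# Illustrative kernels: a 3 x 3 box filter (smoothing) and a Laplacian-style
# sharpening filter (edge enhancement). Both sum to 1, so uniform regions
# keep their original gray level.
SMOOTH = np.full((3, 3), 1.0 / 9.0)
SHARPEN = np.array([[0, -1, 0],
                    [-1, 5, -1],
                    [0, -1, 0]], dtype=float)

if __name__ == "__main__":
    # Hypothetical 6 x 6 image with a vertical step edge (dark left, bright right).
    step = np.zeros((6, 6), dtype=np.uint8)
    step[:, 3:] = 200
    print(convolve2d(step, SMOOTH)[0])   # edge spread over neighbouring columns
    print(convolve2d(step, SHARPEN)[0])  # edge exaggerated by undershoot and overshoot
    print(point_process(step, 0.5)[0])   # bright pixels brightened, geometry unchanged
```

Point processing changes only the gray-scale mapping, so the position of the edge is untouched; convolution moves information between neighbouring pixels, which is why it can blur or sharpen borders.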

Introduction

The fine structure and complex motion of the heart demand imaging modalities with higher temporal and spatial resolution than that needed for any other organ. Fortunately, research over the last 50 years has led to dramatic improvements in our ability to characterize and quantify disorders of the cardiovascular system. The chapters in this section of the book describe the clinical aspects of the major cardiovascular imaging modalities. As a prelude, this chapter describes many of the concepts common to these techniques, including image generation, computer processing, assessment of diagnostic accuracy, and the emerging area of digital storage and transmission. An understanding of the physical background of these methods will help the reader better understand the clinical applications described in later chapters.

Here we consider four broad ways to acquire images of the heart: x-ray transmission, radionuclide emission, ultrasonic reflection, and nuclear magnetic resonance. Although these techniques share many features, together they span virtually all of classical and quantum physics. This chapter can only touch on these topics, and the reader is referred to a number of excellent texts for greater detail (1,2,3,4,5,6,7,8,9,10,11,12,13).

What Is Imaging?

In a broad sense, imaging displays the differential interaction of energy with matter to discern structure or function. For instance, radiography exploits the fact that more x-rays are absorbed by bone than by soft tissue, and echocardiography displays the reflection of ultrasonic energy from the boundary between two tissues with different acoustic impedances. Radionuclide techniques are slightly different in that they deliver an energy source (the radioactive compound) to the body, where it is concentrated in structures of interest and then localized by external detectors. Magnetic resonance imaging maps the differential distribution of the weak magnetic properties of tissue, chiefly those of hydrogen nuclei.

Basic Concepts

A number of terms and concepts are common to all imaging modalities.

Resolution

Spatial resolution refers to the smallest separation at which two objects can be distinguished. For example, spatial resolution is measured in radiography with a grid of finely spaced lines and specified as the number of line pairs per centimeter, a relatively constant number across the field of view. Echocardiography typically has greater resolution in the near field than in the far field because of the divergence of the ultrasound beam and is anisotropic, better in the axial direction (along a scan line) than in the lateral direction (across scan lines). One must distinguish the physical resolution of the imaging modality from the resolution of the display. The spacing of the picture elements (pixels) on the screen may be finer or coarser than the physical resolution and is stated as the number of pixels in the horizontal and vertical directions (640 × 480 is typical for echocardiography, 1024 × 1024 for high-resolution digital angiography, and 2048 × 2048 or even higher for digital radiographic and mammographic images). The functional spatial resolution is always the lesser of the physical and screen resolutions.
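The rule that functional resolution is the lesser of the two can be expressed as taking the coarser (larger) of two distances. The sketch below assumes, as a simplification, that one screen pixel spacing is the display limit; the field of view, physical resolution, and function names are hypothetical.

```python
def display_pixel_spacing_mm(field_of_view_mm: float, pixels: int) -> float:
    """Distance in the patient represented by one screen pixel along one axis."""
    return field_of_view_mm / pixels


def functional_resolution_mm(physical_resolution_mm: float,
                             field_of_view_mm: float, pixels: int) -> float:
    """Coarser of the physical and display limits (a larger number means worse resolution).

    Simplification: one pixel spacing is treated as the display limit.
    """
    return max(physical_resolution_mm,
               display_pixel_spacing_mm(field_of_view_mm, pixels))


if __name__ == "__main__":
    # Hypothetical modality resolving 1.0 mm over a 160-mm field of view.
    for pixels in (480, 100):
        print(pixels, "pixels ->", functional_resolution_mm(1.0, 160.0, pixels), "mm")
```

With 480 pixels the display is finer than the modality, so the physical limit governs; with only 100 pixels the display becomes the bottleneck.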

Temporal resolution refers to the frequency with which an image is generated, usually stated in frames per second. Plain radiographs are typically taken as single images, but temporal resolution may still be relevant because the shutter speed (the time the film is actually exposed to x-rays) determines whether rapid movements (such as prosthetic valve motion) can be “frozen” in the image. There often is a trade-off between temporal and spatial resolution. Echocardiograms can be generated more frequently at the expense of fewer scan lines per image, whereas multigated nuclear scans can be divided into shorter temporal “bins,” but this reduces the amount of data available for each image, reducing the signal-to-noise ratio and spatial resolution. Velocity resolution refers to the smallest difference in velocity that can be discerned.
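Both trade-offs in the preceding paragraph follow from simple physics that the chapter does not spell out: each echo scan line must wait for a pulse to travel to the maximum depth and back, and nuclear counts obey Poisson statistics, so relative noise scales as 1/√(counts). The sketch assumes a soft-tissue sound speed of 1540 m/s, one transmit per scan line with no parallel (multiline) receive beamforming, and hypothetical depths, line counts, and total counts.

```python
import math

SPEED_OF_SOUND_M_S = 1540.0  # conventional average for soft tissue


def echo_max_frame_rate(depth_cm: float, scan_lines: int,
                        speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Upper bound on 2D echo frame rate when each scan line needs one pulse round trip.

    Assumes one transmit per line and no parallel (multiline) receive beamforming.
    """
    round_trip_s = 2.0 * (depth_cm / 100.0) / speed_m_s
    return 1.0 / (scan_lines * round_trip_s)


def gated_relative_noise(total_counts: float, frames: int) -> float:
    """Relative Poisson noise per frame of a gated study: 1 / sqrt(counts per frame)."""
    counts_per_frame = total_counts / frames
    return 1.0 / math.sqrt(counts_per_frame)


if __name__ == "__main__":
    # Halving the scan lines doubles the achievable echo frame rate...
    print(echo_max_frame_rate(15, 128), echo_max_frame_rate(15, 64))
    # ...while doubling the number of gated frames raises per-frame noise by sqrt(2).
    print(gated_relative_noise(1e6, 16), gated_relative_noise(1e6, 32))
```

The two functions capture the same underlying limit from opposite directions: a fixed time (echo) or a fixed number of counts (nuclear) must be shared among more frames when temporal resolution is increased.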

Dimensionality

The heart can be considered a four-dimensional (4D) structure, possessing three spatial dimensions and one temporal one. Most imaging modalities have two spatial dimensions (i.e., a picture, like a radiograph or an echocardiographic image), but some magnetic resonance, computed tomographic, nuclear, and echocardiographic studies are intrinsically three dimensional, although the display may be on a 2D screen.

Transmission versus Tomographic Imaging

Two-dimensional images of the heart can be generated by either transmission or tomographic techniques. In transmission imaging, the full thickness of the heart is projected onto a screen, whereas a tomogram displays structures lying within a single plane of the heart. For most applications, tomographic imaging (used in magnetic resonance imaging [MRI], computed tomography [CT], echocardiography, and many nuclear tests) is preferable because there is no interference from overlying structures. For angiography, however, transmission imaging is actually an advantage because the full course of the vessel can be visualized, something no single tomographic plane could do. Indeed, in MR and CT angiography, the 3D data set is projected onto a plane to generate a transmission image for easier diagnosis.
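The text does not say which projection is used to turn a 3D angiographic data set into a transmission-like image. The sketch below compares a simple sum projection with maximum intensity projection (MIP), a widely used choice in MR and CT angiography; the synthetic volume and function names are hypothetical.

```python
import numpy as np


def sum_projection(volume: np.ndarray, axis: int = 0) -> np.ndarray:
    """Add up every voxel along each ray, loosely mimicking a transmission image."""
    return volume.sum(axis=axis)


def max_intensity_projection(volume: np.ndarray, axis: int = 0) -> np.ndarray:
    """Keep only the brightest voxel along each ray (maximum intensity projection)."""
    return volume.max(axis=axis)


if __name__ == "__main__":
    # Hypothetical 8 x 8 x 8 volume: dim background (0.1) plus a bright "vessel"
    # running obliquely, so any single slice along axis 0 holds only one point of it.
    n = 8
    volume = np.full((n, n, n), 0.1)
    for z in range(n):
        volume[z, z, 4] = 1.0
    print("vessel voxels in slice 0:", int((volume[0] == 1.0).sum()))
    print("MIP, column 4:", max_intensity_projection(volume)[:, 4])
    summed = sum_projection(volume)
    print("sum projection, vessel vs background:", summed[0, 4], summed[0, 3])
```

No single tomographic slice shows the whole vessel, but both projections do; the MIP also keeps the vessel-to-background contrast high, whereas summing lets the background along each ray dilute it.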

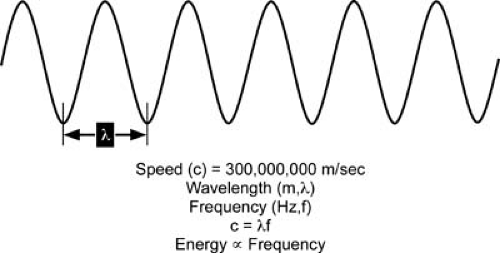

Imaging with Electromagnetic Radiation: x-rays and γ-rays

Although radiography uses x-rays delivered externally to image the body and nuclear imaging uses γ-rays produced inside the body, there actually is no physical difference between x-rays and γ-rays, both being forms of high-energy electromagnetic radiation. By definition, γ-rays are produced by radioactive decay within the atomic nucleus, whereas x-rays are produced by processes in the electron cloud surrounding the nucleus. Electromagnetic radiation can be thought of as a wave with frequency f and wavelength λ, the product of which is equal to the speed of light (299,792 km/sec). It can also be thought of as a stream of discrete massless particles (photons) propagating at the speed of light with an energy proportional to the frequency (E = hf, where h is Planck's constant), usually expressed in units of the electron volt (eV), the energy acquired by an electron when it is accelerated through a potential difference of 1 volt. Figure 46.1 illustrates these concepts, and Table 46.1 shows typical values for a range of electromagnetic radiation. It is important to remember that neither the particle nor the wave nature of electromagnetic radiation is “correct.” Both are correct all of the time, although in practice, one aspect or the other is typically more evident. Indeed, quantum mechanics has even taught us that particles such as the electron can have wavelike properties.
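The wave and particle descriptions are linked by E = hf and c = fλ. As a small sketch (function name hypothetical; constants are the standard SI/CODATA values), the code converts a photon energy in keV into frequency and wavelength, using 60 keV and the 511-keV annihilation energy from the glossary as examples.

```python
PLANCK_EV_S = 4.135667696e-15       # Planck's constant h, in eV·s
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact SI value


def photon_frequency_and_wavelength(energy_kev: float) -> tuple[float, float]:
    """Return (frequency in Hz, wavelength in m) for a photon of the given energy.

    Uses E = h * f and c = f * wavelength.
    """
    energy_ev = energy_kev * 1e3
    frequency_hz = energy_ev / PLANCK_EV_S
    wavelength_m = SPEED_OF_LIGHT_M_S / frequency_hz
    return frequency_hz, wavelength_m


if __name__ == "__main__":
    for kev in (60.0, 511.0):
        f, lam = photon_frequency_and_wavelength(kev)
        print(f"{kev:.0f} keV -> {f:.3e} Hz, {lam * 1e12:.2f} pm")
```

Higher photon energy means higher frequency and proportionally shorter wavelength; diagnostic photons have wavelengths of only a few picometers.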

Interaction of x-rays and γ-rays with Matter

Radiography depends on the differential absorption and scattering of photons by various types of tissue. In nuclear imaging, by contrast, nuclide distribution in the body is the primary determinant, although photon scattering is a major cause of image degradation. For photons in the 50- to 500-keV range (those of principal diagnostic importance), there are two major interactions with matter to consider: the photoelectric effect and Compton scattering. In the photoelectric effect, a photon is completely absorbed by an atom (the desired response), whereas in Compton scattering, the original photon is not completely absorbed but is scattered at a lower energy and at a different angle from the original direction; it can thus degrade the image by striking the x-ray film at a random location. This scattering can be partially ameliorated by placing a collimating grid in front of the film to admit only photons traveling in a straight line from the x-ray source (Fig. 46.2), but it is harder to exclude these scattered photons in nuclear imaging.
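One reason scatter is hard to exclude in nuclear imaging is that the emitting source is inside the patient, so scattered photons cannot be rejected by direction alone and must instead be rejected by their lower energy. The sketch below applies the standard Compton formula, E′ = E / [1 + (E/mₑc²)(1 − cos θ)], which the chapter does not state, to 140-keV photons (the principal photon energy of technetium-99m). The ±10% acceptance window and the function names are illustrative assumptions, not protocol settings.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.999  # m_e c^2


def compton_scattered_energy(energy_kev: float, angle_deg: float) -> float:
    """Energy of a photon after Compton scattering through angle_deg (Compton formula)."""
    theta = math.radians(angle_deg)
    return energy_kev / (1.0 + (energy_kev / ELECTRON_REST_ENERGY_KEV)
                         * (1.0 - math.cos(theta)))


def inside_energy_window(energy_kev: float, photopeak_kev: float,
                         half_width: float = 0.10) -> bool:
    """True if a detected photon falls inside a symmetric window around the photopeak.

    The +/-10% default is an illustrative assumption.
    """
    return abs(energy_kev - photopeak_kev) <= half_width * photopeak_kev


if __name__ == "__main__":
    photopeak = 140.0  # keV
    for angle in (0, 30, 60, 90, 180):
        e = compton_scattered_energy(photopeak, angle)
        print(f"{angle:3d} deg: {e:6.1f} keV, accepted={inside_energy_window(e, photopeak)}")
```

Photons deflected through small angles lose only a few keV and pass the energy window, so they are recorded as if they had come straight from their point of scatter; only photons scattered through larger angles are rejected.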

The likelihood of the photoelectric effect (PE) is inversely proportional to the third power of the photon energy E and directly proportional to the fourth power of the atomic number Z (number of protons) of the interacting atom: $PE \propto Z^{4}/E^{3}$. For soft tissue (average Z