Chapter 5 Shock, Electrolytes, and Fluid

History

Resuscitation

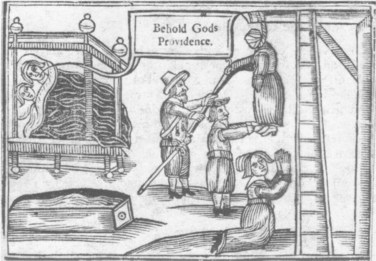

One of the earliest authenticated resuscitations in the medical literature is the miraculous deliverance of Anne Green, who was executed by hanging on December 14, 1650. Green was executed in the customary way by being forced off a ladder to hang by the neck. She hung for 30 minutes, during which time some of her friends pulled “with all their weight upon her legs, sometimes lifting her up, and then pulling her down again with a sudden jerk, thereby the sooner to dispatch her out of her pain”1 (Fig. 5-1). When everyone thought she was dead, the body was taken down, put in a coffin, and carried to the private house of Dr. William Petty—who, by the king’s orders, was allowed to perform autopsies on the bodies of everyone who had been executed.

FIGURE 5-1 Miraculous deliverance of Anne Green, who was executed in 1650.

(From Hughes JT: Miraculous deliverance of Anne Green: an Oxford case of resuscitation in the seventeenth century. Br Med J [Clin Res Ed] 285:1792–1793, 1982; by kind permission of the Bodleian Library, Oxford.)

Shock

Humoral theories persisted until the late 19th century but, in 1830, Herman provided one of the first clear descriptions of intravenous (IV) fluid therapy. In response to a cholera epidemic, he attempted to rehydrate patients by injecting 6 ounces of water into the vein. In 1831, O’Shaughnessy also treated cholera patients by administering large volumes of salt solutions intravenously and published his results in The Lancet.2 Those were the first documented attempts to replace and maintain the extracellular internal environment or the intravascular volume. Note, however, that the treatment of cholera and dehydration is not the ideal treatment of hemorrhagic shock.

In 1872, Gross defined shock as “a manifestation of the rude unhinging of the machinery of life.” His definition, given its accuracy and descriptiveness, has been repeatedly quoted in the literature. Theories on the cause of shock persisted through the late 19th century; although it was unexplainable, it was often observed. George Washington Crile investigated it and concluded, at the beginning of his career, that the lowering of the central venous pressure in the shock state in animal experiments was caused by a failure of the autonomic nervous system.3 Surgeons witnessed a marked change in ideas about shock between 1888 and 1918. In the late 1880s, there were no all-encompassing theories, but most surgeons accepted the generalization that shock resulted from a malfunctioning of some part of the nervous system. Such a malfunctioning has now been shown not to be the main reason—but surgeons are still perplexed by the mechanisms of hemorrhagic shock, especially regarding the complete breakdown of the circulatory system that occurs in the later stages of shock.

Crile’s theories evolved as he continued his experimentations; in 1913, he proposed the kinetic system theory. He was interested in thyroid hormone and its response to wounds, but realized that adrenalin was a key component of the response to shock. He relied on experiments by Walter B. Cannon, who found that adrenalin was released in response to pain or emotion, shifting blood from the intestines to the brain and extremities. Adrenalin release also stimulated the liver to convert glycogen to sugar for release into the circulation. Cannon argued that all the actions of adrenalin aided the animal in its effort to defend itself.4

Then, in the 1930s, a unique set of experiments by Blalock5 determined that almost all acute injuries were associated with changes in fluid and electrolyte metabolism. Such changes were primarily the result of reductions in the effective circulating blood volume. Blalock showed that those reductions after injury could be the result of several mechanisms (Box 5-1). He clearly showed that fluid loss in injured tissues involved the loss of extracellular fluid (ECF) that was unavailable to the intravascular space for maintaining circulation. The original concept of a “third space,” in which fluid is sequestered and therefore unavailable to the intravascular space, evolved from Blalock’s studies.

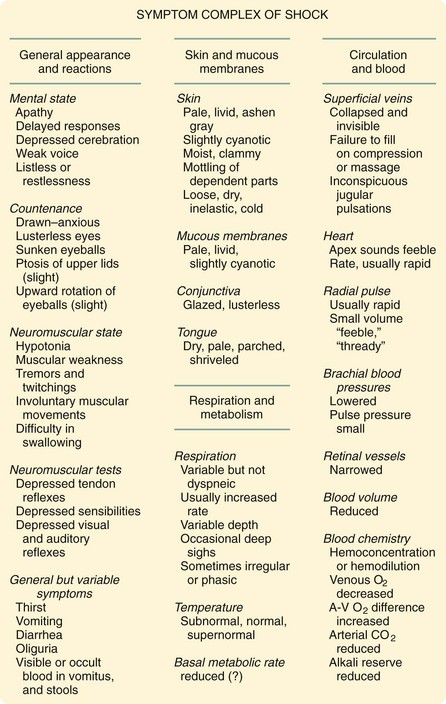

Carl John Wiggers first described the concept of irreversible shock.6 His 1950 textbook, Physiology of Shock, represented the attitudes toward shock at that time. In an exceptionally brilliant summation, Wiggers assembled the various signs and symptoms of shock from various authors in that textbook (Fig. 5-2), along with his own findings. His experiments used what is now known as the Wiggers prep. In his usual experiments, he used previously splenectomized dogs and cannulated their arterial systems. He took advantage of an evolving technology that allowed him to measure the pressure in the arterial system, and he studied the effects of lowering BP through blood withdrawal. After removing the dogs’ blood to an arbitrary set point (typically, 40 mm Hg), he noted that their BP soon spontaneously rose as fluid was spontaneously recruited into the intravascular space.

FIGURE 5-2 Wiggers’ description of symptom complex of shock.

(From Wiggers CJ: Present status of shock problem. Physiol Rev 22:74, 1942.)

Fluids

Normal saline has been used for many years and is extremely beneficial, but we now know that it also can be harmful. Hartog Jakob Hamburger, in his in vitro studies of red cell lysis in 1882, incorrectly suggested that 0.9% saline was the concentration of salt in human blood. This fluid is often referred to as physiologic or normal saline, but it is neither physiologic nor normal. Supposedly, 0.9% normal saline originated during the cholera pandemic that afflicted Europe in 1831, but an examination of the composition of the fluids used by physicians of that era found no resemblance to normal saline. The origin of the concept of normal saline remains unclear.7

In 1831, O’Shaughnessy described his experience in the treatment of cholera8:

Over the next 50 years, many reports cited various recipes to treat cholera, but none resembled 0.9% saline. In 1883, Sydney Ringer reported on the influence exerted by the constituents of the blood on the contractions of the ventricle (Fig. 5-3). Studying hearts cut out of frogs, he used 0.75% saline and a blood mixture made from dried bullocks’ blood.9 In his attempts to identify which aspect of blood caused better results, he found that a “small quantity of white of egg completely obviates the changes occurring with saline solution.” He concluded that the benefit of white of egg was because of the albumin or potassium chloride. To show what worked and what did not, he described endless experiments, with alterations of multiple variables.

FIGURE 5-3 Sydney Ringer, credited for the development of lactated Ringer’s solution.

(From Baskett TF: Sydney Ringer and lactated Ringer’s solution. Resuscitation 58:5–7, 2003.)

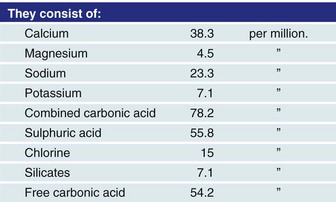

However, Ringer later published another article stating that his previously reported findings could not be repeated; through careful study, he realized that the water used in his first article was actually not distilled water, as reported, but rather tap water from the New River Water Company. It turned out that his laboratory technician, who was paid to distill the water, took shortcuts and used tap water instead. Ringer analyzed the water and found that it contained many trace minerals (Fig. 5-4). Through careful and diligent experimentation, he found that calcium bicarbonate or calcium chloride—in doses even smaller than those in blood—restored good contractions of the frog ventricles. The third component that he found essential to good contractions was sodium bicarbonate. He knew the importance of the trace elements. He also stated that fish could live for weeks unfed in tap water, but would die in distilled water in a few hours; minnows, for example, died in an average of 4.5 hours. Thus, the three ingredients that he found essential were potassium, calcium, and bicarbonate. Ringer’s solution soon became ubiquitous in physiologic laboratory experiments.

FIGURE 5-4 Sydney Ringer’s report of contents in water from the New River Water Company.

(From Baskett TF: Sydney Ringer and lactated Ringer’s solution. Resuscitation 58:5–7, 2003.)

Physiology of Shock

Bleeding

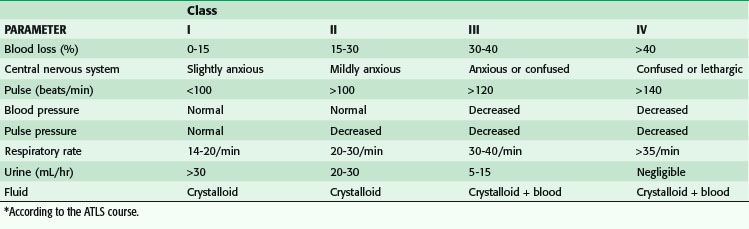

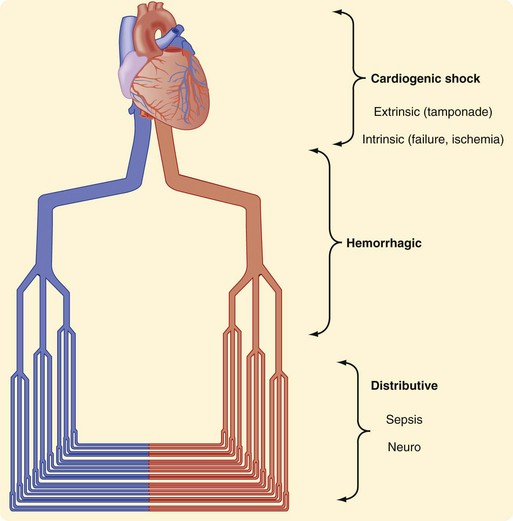

Research and experience have both taught us much about the physiologic responses to bleeding. The Advanced Trauma Life Support (ATLS) course defines four classes of shock (Table 5-1). In general, that categorization has helped point out the physiologic responses to hemorrhagic shock, emphasizing the identification of blood loss and guiding treatment. Shock can be thought of anatomically at three levels (Fig. 5-5). It can be cardiogenic, with extrinsic abnormalities (e.g., tamponade) or intrinsic abnormalities (e.g., pump failure caused by infarct, overall cardiac failure, or contusion). Large vessels can cause shock if they are injured and bleeding results. If the anatomic problem is at the small vessel level, neurogenic dysfunction or sepsis can be the culprit.

One important factor to recognize is that clinical symptoms are relatively few in patients who are in class I shock. The only change in class I shock is anxiety, which is practically impossible to assess—is it the result of factors such as blood loss, pain, trauma, or drugs? A heart rate higher than 100 beats/min has been used as a physical sign of bleeding, but evidence of its significance is minimal. Brasel and colleagues10 have shown that heart rate is neither sensitive nor specific in determining the need for emergent intervention, need for packed red blood cell (PRBC) transfusions in the first 2 hours after an injury, or severity of an injury. Heart rate was not altered by the presence of hypotension (systolic BP <90 mm Hg).

Not until patients are in class III shock does BP supposedly decrease. At this stage, patients have lost 30% to 40% of their blood volume; for an average man weighing 75 kg (165 lb), that can mean up to 2 liters of blood loss (Fig. 5-6). It is helpful to remember that a can of soda or beer is 355 mL; a six pack is 2130 mL. Theoretically, if a patient is hypotensive from blood loss, we are looking for a six pack of blood. Small amounts of blood loss should not result in hypotension. Although intracranial bleeding can cause hypotension in the last stages of herniation, it is almost impossible for that hypotension to result from large volumes of intracranial blood loss because there is not enough room for that volume of blood. It is critical to recognize uncontrolled bleeding, and even more critical to stop bleeding before patients go into class III shock. It is more important to recognize blood loss than it is to replace blood loss. A common mistake is to think that trauma patients are often hypotensive; hypotension is rare in trauma patients (occurring less than 6% of the time).
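The arithmetic behind these figures can be sketched in a few lines of Python. The blood volume of 70 mL/kg is an assumption (a commonly used adult estimate that the text does not state), and the function name is hypothetical; the 30% to 40% range and the 355-mL can come from the paragraph above. This is an illustration of the numbers, not a clinical tool.

```python
ML_PER_KG = 70  # ASSUMPTION: typical adult blood volume per kg; not given in the text
CAN_ML = 355    # volume of one can of soda or beer, from the text


def class_iii_loss_ml(weight_kg, ml_per_kg=ML_PER_KG):
    """Return (low, high) blood loss in mL for 30% to 40% of estimated blood volume."""
    total_ml = weight_kg * ml_per_kg
    return total_ml * 30 / 100, total_ml * 40 / 100


low, high = class_iii_loss_ml(75)
six_pack_ml = 6 * CAN_ML

print(f"Class III loss for a 75-kg patient: {low:.0f} to {high:.0f} mL")
print(f"One six pack: {six_pack_ml} mL")
```

Under the assumed 70 mL/kg, the upper end of the class III range for a 75-kg patient sits close to the six-pack volume, which is the point of the mnemonic.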

In addition, the ATLS course, which was designed for physicians who are not surgeons, does not recognize many subtle but important aspects of bleeding. The concepts of the course are relatively basic. However, surgeons know that there are nuances in the varied responses to injuries in animals and humans. In the case of arterial hemorrhage, for example, we know that animals do not necessarily manifest tachycardia as their first response when bleeding, but actually become bradycardic. It is speculated that this is a teleologically developed mechanism because a bradycardic response reduces cardiac output and minimizes free uncontrolled exsanguination; however, a bradycardic response to bleeding is not consistently shown in all animals, including humans. Some evidence has shown that this response, termed relative bradycardia, does occur in humans. Relative bradycardia is defined as a heart rate lower than 100 beats/min when the systolic BP is less than 90 mm Hg. When bleeding patients have relative bradycardia, their mortality rate is lower. Interestingly, up to 44% of hypotensive patients have relative bradycardia. However, patients with a heart rate lower than 60 beats/min are usually moribund. Bleeding patients with a heart rate of 60 to 90 beats/min have a higher survival rate than patients who are tachycardic (heart rate >90 beats/min).11
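The definition of relative bradycardia and the heart rate bands quoted above can be written out as simple predicates. The following Python sketch uses hypothetical function names and only the thresholds given in the text; placing a heart rate of exactly 90 beats/min in the middle band is an assumption, and the code is meant to make the definitions concrete rather than to guide care.

```python
def is_relative_bradycardia(heart_rate, systolic_bp):
    """Text definition: heart rate < 100 beats/min while systolic BP < 90 mm Hg."""
    return systolic_bp < 90 and heart_rate < 100


def heart_rate_band(heart_rate):
    """Bands the text describes for bleeding patients.

    ASSUMPTION: a rate of exactly 90 is grouped with the 60-to-90 band.
    """
    if heart_rate < 60:
        return "below 60 (usually moribund)"
    if heart_rate <= 90:
        return "60 to 90 (higher survival)"
    return "above 90 (tachycardic)"


# Hypothetical patient: hypotensive, heart rate 85 beats/min.
print(is_relative_bradycardia(85, 80), heart_rate_band(85))
```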

It is generally taught that the hematocrit or hemoglobin level is not reliable for predicting blood loss. This is true for patients with a high hematocrit or hemoglobin level, but in patients resuscitated with fluids, the hematocrit and hemoglobin levels can drop rapidly. Bruns and associates12 have shown that the hemoglobin level can be low within the first 30 minutes after the patient arrives at a trauma center. Therefore, although patients with a high or normal hemoglobin level may have significant bleeding, a low hemoglobin level, because it occurs rapidly, usually reflects the actual hemoglobin level and extent of blood loss. Infusion of acellular fluids often will dilute the blood and decrease the hemoglobin levels even further.

The lack of good indicators to distinguish which patients are bleeding has led many investigators to examine heart rate variability or complexity as a potential new vital sign. Many clinical studies have shown that heart rate variability or complexity is associated with poor outcome, but this has yet to catch on, perhaps because of the difficulty of calculating it. Heart rate variability or complexity would have to be calculated using software, with a resulting index on which clinicians would have to rely; this information would not be available merely by examining patients. Another issue with heart rate variability or complexity is that the exact physiologic mechanism for its association with poor outcome has yet to be elucidated.13 This new vital sign may be programmable into currently used monitors, but its usefulness has yet to be confirmed.

Hypotension has been traditionally set, arbitrarily, at 90 mm Hg and below. However, Eastridge and coworkers14 have suggested that hypotension be redefined as 110 mm Hg and below, because that BP is more predictive of death and hypoperfusion. They concluded that 110 mm Hg would be a more clinically relevant cutoff point for hypotension and hypoperfusion. In 2008, Bruns and colleagues15 confirmed that concept, showing that a prehospital BP lower than 110 mm Hg was associated with a sharp increase in mortality, and 15% of patients with that BP would eventually die in the hospital. As a result, they recommended redefining prehospital triage systems. Of note, especially in older patients, normal vital signs may miss occult hypoperfusion as indicated by increased lactate levels and base deficit.16

Lactate and Base Deficit

Lactate has been a marker of injury, and possibly ischemia, and has stood the test of time.16 However, new data question the cause and role of lactate. Emerging information is confusing; it suggests that we may not understand lactate for what it truly implies. Lactate has long been thought to be a byproduct of anaerobic metabolism and is routinely perceived to be an end waste product that is completely unfavorable. Physiologists are now questioning this paradigm and have found that lactate behaves more advantageously than not. An analogy would be that firefighters are associated with fires, but that does not mean that firefighters are bad, nor does it mean that they caused the fires.

In canine muscle, lactate is produced by moderate-intensity exercise when the oxygen supply is ample. A high adrenergic stimulus also causes a rise in lactate level as the body prepares or responds to stress. A study of climbers of Mount Everest has shown that the resting PO2 on the summit was approximately 28 mm Hg and decreased even more during exercise.17 The blood lactate level in those climbers was essentially the same as at sea level. These studies have allowed us to question lactate and its true role.

In humans, lactate may be the preferred fuel in the brain and heart; infused lactate is used before glucose at rest and during exercise. Because it is glucose sparing, lactate allows glucose and glycogen levels to be maintained. In addition, some data point to lactate’s protective role in traumatic brain injuries (TBIs).18 Lactate fuels the human brain during exercise. The level of lactate, whether it is a waste product or source of energy, seems to signify tissue distress, from anaerobic conditions or other factors.19 Release of epinephrine and other catecholamines will result in higher lactate levels.

One of the problems with base deficit is that it is commonly influenced by the chloride from various resuscitation fluids, resulting in a hyperchloremic nongap acidosis. In patients with renal failure, base deficit can also be a poor predictor of outcome. In the acute stage of renal failure, a base deficit lower than 6 mmol/liter is associated with a poor outcome.20 In trauma patients given hypertonic saline (HTS), which has three to eight times the sodium chloride concentration of normal saline, depending on the concentration used, the resulting hyperchloremic acidosis has been shown to be relatively harmless. However, when HTS is used, the base deficit should be interpreted with caution.

Compensatory Mechanisms

Then, the juxtaglomerular apparatus in the kidney—in response to the vasoconstriction and decrease in blood flow—produces the enzyme renin, which generates angiotensin I. The angiotensin-converting enzyme located on the endothelial cells of the pulmonary arteries converts angiotensin I to angiotensin II. In turn, angiotensin II stimulates an increased sympathetic drive, at the level of the nerve terminal, by releasing hormones from the adrenal medulla. In response, the adrenal medulla affects intravascular volume during shock by secreting catechol hormones—epinephrine, norepinephrine, and dopamine— which are all produced from phenylalanine and tyrosine. They are called catecholamines because they contain a catechol group derived from the amino acid tyrosine. The release of catecholamines is thought to be responsible for the elevated glucose level in hemorrhagic shock. Although the role of glucose elevation in hemorrhagic shock is not fully understood, it does not seem to affect outcome.21

Lethal Triad

Hypothermia

Medical or accidental hypothermia is also very different from trauma-associated hypothermia (Table 5-2). The survival rates after accidental hypothermia range from approximately 12% to 39%; the average temperature drop is to approximately 30° C (range, 13.7° to 35.0° C). The lowest recorded temperature in a survivor of accidental hypothermia (13.7° C [56.7° F]) was in an extreme skier in Norway; she was trapped under the ice and eventually fully recovered neurologically.

Table 5-2 Classification of Hypothermia by Cause

| DEGREE | CAUSE: TRAUMA | CAUSE: ACCIDENT |
|---|---|---|
| Mild | 36°-34° C | 35°-32° C |
| Moderate | 34°-32° C | 32°-28° C |
| Severe | <32° C (<90° F) | <28° C (<82° F) |
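Table 5-2 amounts to two sets of thresholds applied to the same core temperature. The Python sketch below encodes those thresholds; the names are hypothetical, and because adjacent ranges in the table share their endpoints, assigning a shared endpoint to the milder category is an assumption (the severe cutoffs are strict, as in the table).

```python
# Thresholds in degrees C, taken from Table 5-2.
THRESHOLDS = {
    "trauma": {"upper": 36.0, "moderate": 34.0, "severe": 32.0},
    "accident": {"upper": 35.0, "moderate": 32.0, "severe": 28.0},
}


def classify_hypothermia(core_temp_c, cause):
    """Return 'none', 'mild', 'moderate', or 'severe' for a given cause.

    ASSUMPTION: a temperature equal to a shared endpoint (e.g., 34 for trauma)
    falls in the milder category.
    """
    t = THRESHOLDS[cause]
    if core_temp_c < t["severe"]:
        return "severe"
    if core_temp_c < t["moderate"]:
        return "moderate"
    if core_temp_c <= t["upper"]:
        return "mild"
    return "none"


# The same temperature is graded differently depending on the cause.
for cause in ("trauma", "accident"):
    print(cause, classify_hypothermia(33.0, cause))
```

A core temperature of 33° C is moderate hypothermia in a trauma patient but only mild after accidental exposure, which is the distinction the table is drawing.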

The data in patients with trauma-associated hypothermia differ. Their survival rate falls dramatically with their core temperature, reaching 100% mortality when it reaches 32° C at any point, whether in the emergency room, operating room, or intensive care unit (ICU). In trauma patients, hypothermia is caused by shock and is thought to perpetuate uncontrolled bleeding because of the associated coagulopathy. Trauma patients with a postoperative core temperature lower than 35° C have a fourfold increase in mortality; those with a core temperature lower than 33° C have a sevenfold increase. Hypothermic trauma patients tend to be more severely injured and older, with bleeding as indicated by blood loss and transfusions.22

Surprisingly, in a study using the National Trauma Data Bank, Shafi and associates have shown that hypothermia and its associated poor outcome are not related to the state of shock. It was previously thought that a core temperature lower than 32° C was uniformly fatal in trauma patients who have the additional insult of tissue injury and bleeding. However, a small number of trauma patients have now survived, despite a recorded core temperature lower than 32° C. In a multi-institutional trial, Beilman and coworkers23 have recently demonstrated that hypothermia is associated with more severe injuries, bleeding, and a higher rate of multiorgan dysfunction in the ICU, but not with death.

Rewarming techniques are classified as passive or active. Active warming is further classified as external or internal (Table 5-3). Passive warming involves preventing heat loss. Examples of passive warming include drying the patient to minimize evaporative cooling, giving warm fluids to prevent cooling, and covering the patient so that the ambient air temperature immediately around the patient can be higher than the room temperature. Covering the patient’s head prevents a substantial amount of heat loss. Aluminum-lined head covers are preferred; they reflect back the infrared radiation that is normally lost through the scalp. Warming the room technically helps reduce the heat loss gradient, but the surgical staff usually cannot work in a humidified room at 37° C. Passive warming also includes closing open body cavities, such as the chest or abdomen, to prevent evaporative heat loss. The most important way to prevent heat loss is to treat hemorrhagic shock by controlling bleeding. Once shock has been treated, the body’s metabolism will heat the patient from his or her core. This point cannot be overemphasized.

Table 5-3 Classification of Warming Techniques

| PASSIVE | ACTIVE, EXTERNAL | ACTIVE, INTERNAL |
|---|---|---|
| Drying the patient | Bair Hugger | Warmed fluids |
| Warm fluids | Heated warmers | Heat ventilator |
| Warm blankets, sheets | Lamps | Cavity lavage, chest tube, abdomen, bladder |
| Head covers | Radiant warmers | Continuous arterial or venous rewarming |
| Warming the room | Clinitron bed | Full or partial bypass |

The best method to warm patients is to deliver the calories internally (Table 5-4). Heating the air used for ventilators is technically internal active warming, but is inefficient because, again, the heat transfer method is convection. The surface area of the lungs is massive, but the energy is mainly transferred through humidified water droplets, mostly using convection and not conduction. The amount of heat transferred through warmed humidified air is also minimal compared with methods that use conduction. Body cavities can be lavaged by infusing warmed fluids through chest tubes or merely by irrigating the abdominal cavity with hot fluids. Other methods, which have been written about but are rarely used in practice, include gastric lavage and esophageal lavage with special tubes. If gastric lavage is desired, one method is to place two nasogastric tubes and infuse warm fluids through one while the other suctions the fluid back out. Bladder irrigation with an irrigation Foley catheter is useful. Instruments to warm the hand through conduction show much promise but are not yet readily available.

Table 5-4 Calories Delivered by Active Warming

| METHOD | kcal/hr |
|---|---|
| Airway from vent | 9 |
| Overhead radiant warmers | 17 |
| Heating blankets | 20 |
| Convective warmers | 15-26 |
| Body cavity lavages | 35 |
| CAVR | 92-140 |
| Cardiopulmonary bypass | 710 |

CAVR, Continuous arteriovenous rewarming.
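To put the figures in Table 5-4 in perspective, the following Python sketch estimates how long each method would take to raise core temperature by 1° C. It assumes a specific heat for the human body of about 0.83 kcal/kg per °C, a commonly cited approximation that the text does not state, and a hypothetical 70-kg patient. It also ignores ongoing heat loss and the patient’s own metabolic heat production, so the results are rough comparisons at best.

```python
SPECIFIC_HEAT = 0.83  # ASSUMPTION: kcal per kg per deg C for the human body; not from the text

# kcal/hr from Table 5-4; single values are stored as (value, value).
KCAL_PER_HR = {
    "Airway from vent": (9, 9),
    "Overhead radiant warmers": (17, 17),
    "Heating blankets": (20, 20),
    "Convective warmers": (15, 26),
    "Body cavity lavages": (35, 35),
    "CAVR": (92, 140),
    "Cardiopulmonary bypass": (710, 710),
}


def hours_per_degree(weight_kg, kcal_per_hr):
    """Hours needed to deliver the heat that raises body temperature by 1 deg C."""
    return weight_kg * SPECIFIC_HEAT / kcal_per_hr


for method, (low_rate, high_rate) in KCAL_PER_HR.items():
    fastest = hours_per_degree(70, high_rate)
    slowest = hours_per_degree(70, low_rate)
    print(f"{method}: {fastest:.2f} to {slowest:.2f} h per deg C")
```

Under these assumptions, heated ventilator air needs roughly 6.5 hours per degree, whereas cardiopulmonary bypass needs about 5 minutes, which illustrates why internal delivery of calories is described as the best method.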
