Safety and Quality in the Heart Center

Anthony Y. Lee

Tom Taghon

Richard McClead

Wallace Crandall

Terrance Davis

Richard J. Brilli

Introduction

Much of the science of quality, safety, and continuous quality improvement (QI) comes from fields outside medicine, such as nuclear power and commercial aviation. As a result, some quality-related terms, or their specific definitions, may be unfamiliar to the pediatric cardiologist. To avoid confusion and to place these terms in the clinical context of the Heart Center, we offer the following brief definitions:

Quality: The degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge (1). Thus, quality is about outcomes: how successful are we in treating a certain cardiac defect? How accurately can we diagnose it?

Patient safety: Freedom from accidental injury (2), or avoidance, prevention, and amelioration of adverse outcomes or injuries from healthcare processes (3). This is different from employee safety only in viewpoint. The practices which maximize patient safety will also maximize employee safety.

Adverse event: An injury which is caused by medical management, not the patient’s disease (2). Not all adverse events are preventable. Not all untoward outcomes are caused by adverse events. An untoward outcome without medical mismanagement may not be preventable.

Medical error: An event in which a planned action is not carried out or is carried out incorrectly (an “error of execution”), or an event that occurs because of a faulty plan (an “error of planning”) (2,4). James Reason (4) further dissects the anatomy of medical errors into slips, lapses, and fumbles. A slip is when an individual does something they are not supposed to do, and a lapse is when they do not do something they are supposed to do. A fumble is when a normally well-executed task is simply bungled.

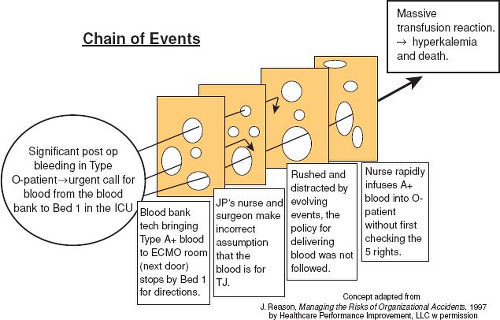

Swiss cheese phenomenon: Another concept popularized by Reason (4) is that most significant adverse events do not result from a single medical error. Instead they result from multiple failures of the barriers (usually policies and procedures) which were intended to protect the patient. Each barrier is represented as a slice of cheese. The barriers are not perfect and the holes in the Swiss cheese represent areas where the barrier could be breached. For an error to reach the patient and cause harm, all the holes in the various Swiss cheese layers must line up.
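
The requirement that every hole line up can be made concrete with simple arithmetic. The Python sketch below assumes, for illustration only, that each barrier fails independently with a fixed probability; real barriers are rarely independent (a hectic unit can weaken several at once), and the probabilities shown are hypothetical, not measured.

```python
from math import prod


def probability_error_reaches_patient(hole_probabilities):
    """Chance that an error passes through every barrier.

    Assumes (for illustration only) that barriers fail independently.
    Each value is a hypothetical probability that one barrier, one slice
    of cheese, fails to stop the error.
    """
    for p in hole_probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError("each probability must lie between 0 and 1")
    # Harm requires every hole to line up, so the probabilities multiply.
    return prod(hole_probabilities)


# Hypothetical transfusion barriers: correct blood bank labeling,
# delivery to the correct bedside, and a bedside double check.
barriers = [0.1, 0.2, 0.05]
with_check = probability_error_reaches_patient(barriers)
without_check = probability_error_reaches_patient(barriers[:2])
print(f"{with_check:.3f} with the double check, {without_check:.3f} without it")
```

In this toy example, dropping the bedside double check raises the chance that an error reaches the patient 20-fold, from 0.001 to 0.02.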

QI work requires a change in mindset for many clinician scientists. QI in health care is characterized by systematic, data-guided activities designed to bring about immediate positive change. QI tools apply deliberate actions guided by data that reflect those actions’ effects. QI is an overarching, ongoing process for improving healthcare delivery outcomes and, as such, differs from more traditional research, which aims to answer a specific question over a discrete period of time (5). Most clinician scientists are trained in the traditional research model (randomized trials with treatment and control groups), in which an intervention is introduced while all or most other variables are controlled, and the result is then examined, often against a comparison group that did not receive the intervention. QI work, on the other hand, monitors the system in real time, introduces interventions, observes how the system responds, and then introduces further interventions until a desired and measurable outcome is achieved. QI does not attempt to control system variables as interventions are introduced. Understanding complex system interactions, rather than controlling them, distinguishes QI from intervention-based research trials.

This chapter is intended to provide the cardiology specialist with an overview of quality- and safety-related principles. We have chosen to use a clinical case scenario that caused significant harm to a patient to illustrate QI concepts and tools. The case is based upon a cardiac ICU–related medical error but could have happened in the pediatric ICU or even on a general care unit.

I. Case Study

JP is a 5-day-old boy with hypoplastic left heart syndrome (mitral atresia/aortic atresia) who was transferred to the cardiac intensive care unit (CICU) from a referring hospital. He underwent first-stage palliation on day of life 5 with a traditional Norwood procedure and a modified Blalock–Thomas–Taussig shunt. The surgery was routine; however, upon arrival in the CICU, his chest tube output was high. JP’s hemoglobin dropped from 13 to 8 g/dL over the first 4 hours, and his serum lactate increased to 5.5 mmol/L. The attending physician ordered 20 mL/kg of packed red blood cells, along with platelets and fresh frozen plasma, for this blood type O-negative patient.

Meanwhile, another patient in the CICU, who had undergone surgical repair of tetralogy of Fallot on the same day, also required a transfusion for ongoing chest tube output. The critical care fellow ordered 20 mL/kg of packed red blood cells (A positive) and platelets for this patient.

Because JP’s bleeding was not decreasing, preparations were made to take him back to the operating room for surgical exploration. The CICU attending physician called the blood bank and asked that the blood be delivered “STAT.” Shortly afterward, a blood bank technician who was unfamiliar with the unit’s physical layout arrived with the blood intended for the patient with tetralogy of Fallot. JP’s nurse saw the technician, assumed the blood was the blood ordered for JP, and took it from the technician. With JP becoming hypotensive, the surgeon decided to explore his chest in the CICU rather than return to the operating room.

During this time, JP’s parents walked into the CICU and were alarmed by the commotion at his bedside. They asked for an update on his condition but were asked to return to the waiting room. The surgeon asked the nurse to give additional blood quickly, and the wrong blood was given by rapid IV push. Within several minutes, JP’s urine turned red, and his bleeding and hypotension worsened. The blood was identified as the A-positive blood intended for the other CICU patient, and an acute hemolytic transfusion reaction was diagnosed. JP received steroids and mannitol. Eventually the bleeding source was found and repaired, but JP continued to have diffuse bleeding.

Once the surgeon had finished the procedure, JP’s parents were allowed back to his bedside. They expressed frustration at not knowing what was happening. Although the CICU fellow updated the parents, they were still confused about what occurred and had many questions about JP’s condition. Over the next 2 hours, JP’s potassium rose and he became anuric. Despite aggressive medical therapy, JP suffered a cardiac arrest and could not be resuscitated.

A serious safety event was declared and a root cause analysis (RCA) was carried out.

II. Root Cause Analysis—A Road to Resolution: What Happened and Why

JP’s case: The wrong blood was given to JP. A subsequent cause analysis revealed: (1) the perceived urgency to administer the blood was used by the nurse as a reason to “skip” the double check that should occur prior to all blood product administration (an “individual failure”); and (2) the hospital and blood bank did not have a clear and well-known double-check policy (with consequences for policy violation) prior to all medication and blood product administration (a “system failure”).

RCA is a framework, developed in industry and more recently applied to health care, that is used retrospectively to identify the system and individual causes of an adverse event. In 1996, the Joint Commission mandated that an RCA be done for all reportable sentinel events, including “mismatched blood administration with transfusion reaction,” yet the literature supporting RCA effectiveness remains limited. In 1998, the Department of Veterans Affairs (VA) National Center for Patient Safety was established. Bagian et al. transformed the VA cause analysis system by implementing a front-line, staff-driven process that emphasized searching for system vulnerabilities with actionable solutions and de-emphasized the less actionable human-error root causes (6). Recently, Percarpio et al. examined the evidence that RCA improves patient safety (7). Of 38 references, 11 case studies used clinical or process outcome measures to assess RCA effectiveness, described corrective actions, and reported improved clinical outcomes after the RCA. Examples of improved outcomes included reduced mortality after various surgical procedures, fewer patient falls, and improved liver transplant graft survival. Importantly, no pediatric studies were reported.

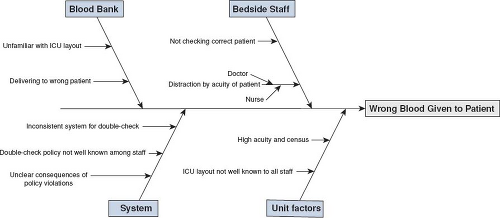

There are multiple templates for completing an RCA; all include an event description with a timeline, identification of causal events, and suggested corrective actions (7,8). Causal factors are often broken into subcategories such as patient factors, caregiver factors, team factors, and technology or environmental factors (8). Asking “why?” five times in succession has been used to help identify all the factors contributing to an adverse event (9). The answers to these “why” questions are used to build a cause-and-effect diagram. This “fishbone” diagram can also help map the process and better categorize root causes. The main categories of factors contributing to the event are listed on the fishbone’s “branches” or “ribs,” and within each major branch, smaller branches may be added to describe deeper issues in that category (Fig. 79.1). Percarpio and colleagues also add “measures to track improvement” and “baseline with follow-up data” to this RCA framework.
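
A fishbone diagram is essentially a small tree: one effect, a set of category ribs, and causes that branch into deeper causes as each “why?” is answered. The Python sketch below builds one for JP’s case from the failures described in this chapter. The class and method names are hypothetical, and the “Policy factors” rib is an illustrative addition to the example categories listed above.

```python
from collections import defaultdict


class Fishbone:
    """Minimal cause-and-effect (fishbone) diagram: effect, ribs, causes."""

    def __init__(self, effect):
        self.effect = effect
        # Each rib (category) maps to a list of (cause, deeper causes) pairs.
        self.ribs = defaultdict(list)

    def add_cause(self, rib, cause, deeper_causes=()):
        # Deeper causes are the answers to successive "why?" questions.
        self.ribs[rib].append((cause, list(deeper_causes)))

    def render(self):
        lines = [f"EFFECT: {self.effect}"]
        for rib, causes in self.ribs.items():
            lines.append(f"  {rib}")
            for cause, deeper in causes:
                lines.append(f"    - {cause}")
                lines.extend(f"        - {d}" for d in deeper)
        return "\n".join(lines)


# Hypothetical diagram built from the failures described for JP's case.
diagram = Fishbone("Wrong blood given to JP")
diagram.add_cause(
    "Caregiver factors",
    "Bedside double check skipped",
    ["Perceived urgency of a STAT request", "Patient deteriorating rapidly"],
)
diagram.add_cause(
    "Caregiver factors",
    "Delivery not confirmed to the correct bed",
    ["Technician unfamiliar with the unit layout"],
)
diagram.add_cause(
    "Technology or environmental factors",
    "Hectic bedside during an emergency chest exploration",
)
diagram.add_cause("Policy factors", "No clear, well-known double-check policy")
print(diagram.render())
```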

There are several potential pitfalls in how most RCA work is completed. An RCA requires considerable resources from front-line staff and hospital leaders, yet evidence that the RCA process improves patient safety or quality is limited. Typical implementation problems include: (1) narrow scope (limiting the findings to the unit in question rather than generalizing them to other hospital areas); (2) unreliable implementation of the identified corrective actions and inadequate follow-up to ensure they are sustained; (3) too much focus on individual human error (a punitive culture) and too little on the larger system that enabled the individual to fail; and (4) hindsight bias on the part of the RCA team, because an RCA is, by definition, conducted after the event has occurred.

In contrast to the retrospective RCA approach, Healthcare Failure Modes and Effects Analysis (HFMEA) takes a prospective approach to adverse event analysis. By anticipating where problems may occur, or by proactively analyzing high-risk processes before they are implemented, hospitals may use HFMEA to prevent adverse events rather than react to events that have already occurred. A commonly used HFMEA template, designed by the VA National Center for Patient Safety (NCPS), assesses a healthcare process in five steps: (1) identifying the topic; (2) forming a multidisciplinary team; (3) creating a graphical description of the process; (4) performing a hazard analysis; and (5) developing actions and identifying outcome measures (10). The hazard analysis (step 4) is an essential part of HFMEA and uses a mathematical scoring system to prioritize risks and potential errors (failure modes) in the process being evaluated. Scoring takes into account both the probability that a failure will actually happen and the consequences if it does. Failure modes with high scores are prioritized for a mitigation plan and an action plan to be followed if the failure occurs. Recently, Ashley and Armitage (11) questioned the reliability of the mathematical scoring systems in current use, which can produce very different prioritization recommendations for the failure modes. They suggest developing a consensus scoring system to mitigate this problem.
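
The prioritization step can be sketched as a severity-times-probability product. The Python example below is loosely modeled on the hazard scoring matrix commonly associated with the VA NCPS template, but the 1 to 4 scales, their labels, the cutoff of 8, and the failure modes themselves are assumptions for illustration.

```python
from dataclasses import dataclass

# Assumed 1-4 scales and cutoff, for illustration only.
SEVERITY = {"minor": 1, "moderate": 2, "major": 3, "catastrophic": 4}
PROBABILITY = {"remote": 1, "uncommon": 2, "occasional": 3, "frequent": 4}
ACTION_THRESHOLD = 8


@dataclass
class FailureMode:
    description: str
    severity: str      # consequence if the failure occurs
    probability: str   # how likely the failure is to occur

    @property
    def hazard_score(self) -> int:
        # Combine consequence and likelihood into one prioritization score.
        return SEVERITY[self.severity] * PROBABILITY[self.probability]


def prioritize(failure_modes):
    """Failure modes at or above the cutoff, highest hazard score first."""
    flagged = [fm for fm in failure_modes if fm.hazard_score >= ACTION_THRESHOLD]
    return sorted(flagged, key=lambda fm: fm.hazard_score, reverse=True)


# Hypothetical failure modes in a transfusion process.
modes = [
    FailureMode("Blood delivered to the wrong bedside", "catastrophic", "uncommon"),
    FailureMode("Bedside double check skipped in an emergency", "catastrophic", "occasional"),
    FailureMode("Transfusion order entered late", "moderate", "occasional"),
]
for fm in prioritize(modes):
    print(fm.hazard_score, fm.description)
```

The sketch also illustrates Ashley and Armitage’s concern: if a second scorer rates the late order entry as “major” rather than “moderate,” its score rises from 6 to 9 and crosses the cutoff, so the ratings themselves, not only the formula, drive the prioritization.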

Our organization and other US pediatric hospitals have collaborated with Healthcare Performance Improvement, LLC© (HPI, Virginia Beach, VA) to implement a robust RCA process as part of a larger strategy to become a highly reliable organization and thereby improve safety and quality outcomes. RCAs are triggered by serious harm events, near-miss harm events, or Joint Commission “sentinel events.” A Joint Commission sentinel event is “an unexpected occurrence involving death or serious physical or psychological injury, or the risk thereof” (12). Sentinel events are identified by outcome alone, without regard to preventability or to whether a variation from expected care practices caused the event. In contrast, a serious harm event starts with a deviation from best practice that results in serious harm. A serious harm event therefore includes both the causal process and the untoward outcome.
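
The distinction between an outcome-only definition and a process-plus-outcome definition can be expressed as a few boolean rules. The Python sketch below is a simplification; the field names are hypothetical, and reading a “near-miss harm event” as a deviation that could have caused serious harm but did not is an assumption, since the chapter does not define it.

```python
from dataclasses import dataclass


@dataclass
class SafetyEvent:
    deviation_from_best_practice: bool
    serious_harm: bool          # death or serious physical/psychological injury
    risk_of_serious_harm: bool  # the event could have caused serious harm


def rca_triggers(event):
    """List which (simplified) RCA-triggering definitions an event meets."""
    reasons = []
    # Sentinel events are defined by outcome (or its risk) alone;
    # preventability and deviation from expected care are not considered.
    if event.serious_harm or event.risk_of_serious_harm:
        reasons.append("sentinel event")
    # A serious harm event needs both the causal deviation and the harm.
    if event.deviation_from_best_practice and event.serious_harm:
        reasons.append("serious harm event")
    # Assumed reading of "near-miss harm event": a deviation that could
    # have caused serious harm but did not.
    if (event.deviation_from_best_practice and event.risk_of_serious_harm
            and not event.serious_harm):
        reasons.append("near-miss harm event")
    return reasons


jp = SafetyEvent(deviation_from_best_practice=True, serious_harm=True,
                 risk_of_serious_harm=True)
print(rca_triggers(jp))
```

JP’s event meets both the sentinel event and serious harm event definitions. An unexpected death with no deviation from expected care would still be a sentinel event under these rules, but not a serious harm event.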

The RCA model we are using involves a careful timeline reconstruction describing the action sequence preceding and following the harm event. The timeline description requires interviews with all staff involved in the event along with a review of pertinent policies and procedures. Inappropriate actions are identified when there is deviation from expected practice or local or national policies/guidelines. The timeline and possible inappropriate actions are discussed by an RCA team which includes individuals who are not involved in the harm event, but who understand the patient care processes that failed. The RCA team is led by an Executive Sponsor whose role is to help the RCA team when or if they reach system barriers that require executive interventions. Root causes, once identified, are discussed by the RCA team and corrective actions are codified. The root causes are categorized into system or individual failure modes. There are multiple subcategories within the larger system or individual failure groupings. This subcategorization is intended to make it easier to find common causes for adverse events, even if the various event specifics are disparate. Individuals who possess the authority to implement the corrective actions are identified, and a timeline for implementation is established. An expected completion date is recorded and follow-up by QI staff takes place to ensure that the corrective actions have been implemented and are sustained.
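
The bookkeeping at the end of this process (owners, expected completion dates, and QI follow-up on whether actions are implemented and sustained) can be sketched as a simple record type. In the Python example below, the field names, the subcategory labels, and the owners and dates for JP’s case are hypothetical; the chapter does not list the subcategories used in its model.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CorrectiveAction:
    root_cause: str
    failure_type: str               # "system" or "individual"
    subcategory: str                # hypothetical grouping label
    action: str
    owner: str                      # person with authority to implement it
    due: date                       # expected completion date
    completed: Optional[date] = None
    sustained: bool = False         # confirmed at QI follow-up


def needs_follow_up(actions, today):
    """Return (overdue, completed but not yet verified as sustained)."""
    overdue = [a for a in actions if a.completed is None and a.due < today]
    unverified = [a for a in actions if a.completed is not None and not a.sustained]
    return overdue, unverified


def common_causes(actions):
    """Count (failure type, subcategory) pairs to spot causes shared across events."""
    return Counter((a.failure_type, a.subcategory) for a in actions)


# Hypothetical corrective actions, owners, and dates for JP's case.
actions = [
    CorrectiveAction(
        root_cause="No clear, well-known double-check policy",
        failure_type="system",
        subcategory="policy",
        action="Write a hospital-wide blood product double-check policy",
        owner="Chief Medical Officer",
        due=date(2024, 3, 1),
        completed=date(2024, 2, 20),
    ),
    CorrectiveAction(
        root_cause="Double check skipped under time pressure",
        failure_type="individual",
        subcategory="at-risk choice",
        action="Coach the nurse involved",
        owner="CICU nurse manager",
        due=date(2024, 2, 1),
    ),
]
overdue, unverified = needs_follow_up(actions, today=date(2024, 3, 15))
print([a.action for a in overdue], [a.action for a in unverified])
print(common_causes(actions))
```

On the hypothetical review date, the coaching action is overdue and the new policy is complete but not yet confirmed as sustained, so both land on the QI follow-up list.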

JP’s case: JP received the wrong blood. System failures included the following: the hospital did not have a clear, consistent method and policy for double-checking blood products, and not all staff members clearly understood the expectations for patient checks or the potential consequences of skipping one. Individual failures included the following: the nurse did not perform a double check before blood administration to ensure the blood was intended for her patient, although she knew the double check was necessary, and the blood bank technician did not confirm that the blood product was delivered to the correct patient bed. System corrective measures included: establishing a hospital-wide policy on how blood products are ordered and delivered to patients; requiring all staff members to perform a mandatory double check of blood products (as well as high-risk medications); and educating staff members about this policy and the reasons it was established. Individual corrective measures included: coaching the nurse involved, because she chose to take an unacceptable risk but has no prior history of safety issues, and providing supplemental education and increased supervision for both the nurse and the blood bank technician.

When Individuals Fail

Several individual failures may have contributed to the preventable harm suffered by JP. The care provider likely realized that a double check of the blood transfusion was required. However, because the environment in the intensive care unit was hectic and the patient was unstable and deteriorating rapidly, this important step was omitted. Health care often promotes a “culture of blame” when individuals fail. “Who messed up?” is frequently the first question asked when patient harm occurs. Individuals are blamed for errors when hospital leaders fail to recognize how a flawed system can affect individual performance. The more useful question is how the system was designed to “help the individual fail.” The blame culture leads to decreased error reporting and further impairs the ability of leaders and front-line staff to identify and fix system-related issues. Nevertheless, it is easier to blame someone and resort to familiar solutions such as “counseling, disciplinary action, enforcing rules, or developing new rules” than to find the root cause of systemic problems. Reason’s “Swiss Cheese Model” outlines how systems have flaws (holes in the cheese) that, when they line up, make it easier for an individual failure (an error) to reach the patient and ultimately cause harm (Fig. 79.2) (13). Providers and patients become the victims of systems that are inadequately designed to prevent or reduce human error. Punishing the individual will not reduce the error rate; in fact, in a punitive environment staff members are less likely to report errors or near-miss errors. James Reason has said, “when an individual forgets (a slip), there is little value in ‘putting a carcass on the wall’ to demonstrate that the problem is fixed” (14).

Figure 79.3 Accountability grid: just culture. (Concept adapted from Reason J. Managing the Risks of Organizational Accidents, 1997 by Healthcare Performance Improvement, LLC © with permission.)

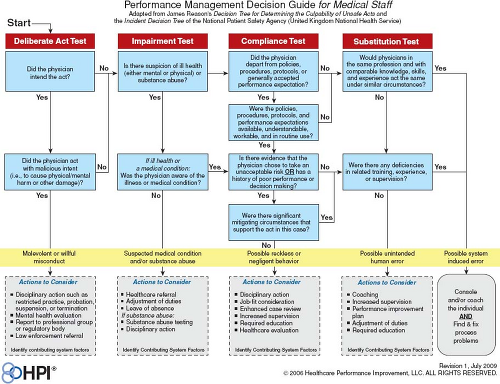

Nevertheless, individuals whose mistakes lead to patient harm should sometimes be held accountable for their individual failures. A healthy safety environment balances a “blame-free culture” with fairness regarding personal accountability. The term “just culture” has been used to describe this balance (15,16). A just culture is not totally blame-free; rather, it is a culture in which the process for evaluating errors is clear and transparent and carefully separates blameworthy from blameless acts. In this case scenario, blaming and removing the employee likely will not prevent a similar episode from occurring with another employee. The system must be constructed to make it easier for employees working in the high-stress ICU environment to stop and think before proceeding with a safety-critical act (e.g., double-checking patient records before blood administration). Because “just culture” does not imply “blame-free,” determining culpability is essential. Reason provides an algorithm for assessing individual culpability for unsafe acts that is consistent with a just culture (17). Through a series of questions, leaders can assess individual culpability: Were the actions and consequences intended? Is a medical condition (e.g., substance abuse or chronic illness) involved? Did the individual knowingly violate a safe operating procedure that was readily available to, and understandable by, the individual? Would others in the same circumstances have done the same thing? Does this person have a history of unsafe acts? Based on the answers to these questions, decreasing levels of culpability can be assigned, ranging from criminal negligence to blameless error (Fig. 79.3).
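
The question sequence can be sketched as an ordered decision procedure: each question is asked in turn, and the first answer that signals culpability settles the level. The Python example below is a simplified sketch of the questions listed above, not a reproduction of Reason’s published algorithm or of Fig. 79.3; the outcome labels are paraphrased assumptions.

```python
from dataclasses import dataclass


@dataclass
class UnsafeAct:
    actions_and_consequences_intended: bool
    medical_condition_involved: bool           # e.g., substance abuse or illness
    knowingly_violated_clear_procedure: bool   # procedure available and understandable
    others_would_do_the_same: bool             # the "substitution" question
    history_of_unsafe_acts: bool


def culpability(act: UnsafeAct) -> str:
    """Ask the questions in order; earlier answers signal greater culpability."""
    if act.actions_and_consequences_intended:
        return "intentional harm"
    if act.medical_condition_involved:
        return "impairment: evaluate the medical condition"
    if act.knowingly_violated_clear_procedure:
        return "possible reckless violation"
    if not act.others_would_do_the_same:
        return "possible negligent error"
    if act.history_of_unsafe_acts:
        return "blameless error, with coaching or corrective training"
    return "blameless error"


example = UnsafeAct(
    actions_and_consequences_intended=False,
    medical_condition_involved=False,
    knowingly_violated_clear_procedure=False,
    others_would_do_the_same=True,
    history_of_unsafe_acts=False,
)
print(culpability(example))
```

In this sketch, a provider who did not knowingly violate a clear procedure, whose peers would likely have acted the same way, and who has no history of unsafe acts reaches blameless error, which points the fix at the system rather than the person.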

III. The Improvement Team—Aim, Key Drivers, and Interventions Developed by the Team

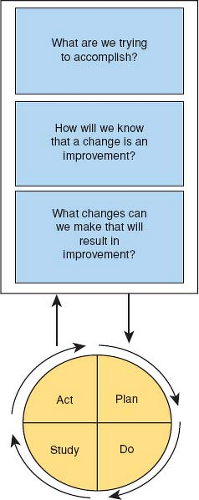

Multiple methodologies have been described for fixing failing systems, including the Model for Improvement, Six Sigma DMAIC, Lean Improvement, and the Seven-Step problem-solving model (18). These methodologies have many similarities and use common tools to implement change. One widely used methodology in health care is the Model for Improvement. This method “attempts to balance the rewards from taking action with the wisdom of careful study before taking action” (18). The Model for Improvement starts with three questions (Fig. 79.4):

“What are we trying to accomplish?” Define your improvement project in a specific aim that states what you intend to improve, by how much, by when, in what population, and for how long. The specific aim should be concise, measurable, and achievable. This is most likely when the aim includes S.M.A.R.T. elements; that is, it should be Specific, Measurable, Actionable, Realistic, and Timely (see below).

“How will we know that a change is an improvement?” If the system that requires improvement is relatively simple, the improvement should be obvious. That may not be the case for more complex systems. Choosing the right metric(s) is critical to the improvement project’s success.

“What changes can be made that will result in improvement?” Ideas for improvement can come from multiple sources, such as the peer-reviewed literature, detailed analysis of internal data, or best practices used by other organizations or industries. Often, improvement ideas are generated by QI teams through group process activities such as brainstorming, nominal group technique, process mapping, fishbone diagram development, or tally sheets.

Through an iterative Plan-Do-Study-Act (PDSA) process, improvement ideas become “tests of change” that are developed (Plan), implemented (Do), monitored (Study), and interpreted (Act). Plan: Each test of change is based on a prediction that improvement will occur, and each PDSA cycle tests that prediction so that learning is acquired. Factors to consider in determining the size of the first PDSA include: (a) system readiness for change; (b) the probability that the proposed change will work; and (c) the consequences to the system if the proposed change fails. The less ready the system, the lower the probability that the change will work, and the greater the risk if it fails, the smaller the first PDSA should be. Do: Carry out the plan on a limited scale and see what happens. Study: Study how the test of change affects the process in question. The data derived from each test of change generate new knowledge and influence the next test. Act: After interpreting the cycle’s results, determine the next steps. This learning fuels the action that initiates the next PDSA cycle, and the process is repeated, incorporating the learning from previous cycles. Because all improvement involves change, but not all change is improvement, PDSAs should usually start on a small scale with just a few patients or staff. Some improvement ideas are not successful and must be abandoned. As confidence in a test of change grows with each cycle, the PDSA can be “scaled up involving more staff or patients” until the new process becomes the norm.
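
The sizing rule for the first PDSA, and the scaling up that follows successful cycles, can be sketched in a few lines. In the Python example below, the ladder of test sizes and the one-step-per-unfavorable-factor rule are hypothetical illustrations of the principle, not a published scoring method.

```python
# Hypothetical ladder of test sizes, smallest first.
SCALES = [
    "one simulated case",
    "one nurse with one patient",
    "one nurse with several patients",
    "a few nurses on one shift",
    "all nurses on the unit",
]


def first_test_index(system_ready, likely_to_work, failure_cost_high):
    """Each unfavorable factor moves the first test one step smaller."""
    unfavorable = int(not system_ready) + int(not likely_to_work) + int(failure_cost_high)
    # Even a fully favorable start stops short of the whole unit.
    return len(SCALES) - 2 - unfavorable


def next_test_index(current, prediction_met):
    """Act step: scale up after a successful cycle; otherwise retest at the
    same size (abandoning the idea is not modeled here)."""
    if prediction_met:
        return min(current + 1, len(SCALES) - 1)
    return current


start = first_test_index(system_ready=False, likely_to_work=False, failure_cost_high=True)
print(SCALES[start])
```

A system that is not ready, with an unproven change and a high cost of failure, starts with a simulated case; the test reaches the whole unit only after predictions are repeatedly met.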

Specific Aim and Key Driver Diagram

The aim and key driver diagram is a tool used to organize the quality team’s improvement theories and to focus and target the team’s work. In JP’s case, a team was charged with creating a reliable process to guarantee the use of a “double check” before blood product administration. The team organized its work by developing a well-defined aim statement, key drivers, and the interventions necessary to reach the stated aim.

S.M.A.R.T. aims must be specific. What is the goal? What is to be achieved? Importantly, an improvement aim should be designed to improve only one thing. S.M.A.R.T. aim statements are measurable: an increase or decrease in the measure will be directly associated with the desired change in the process. S.M.A.R.T. aims are actionable: the team empowered to improve a failed process must be able to affect the process and overcome barriers to improvement. S.M.A.R.T. aims are realistic, in that there must be a reasonable expectation that the aim can be achieved. A realistic aim statement often needs to be “bounded,” or narrowly focused. The team may limit its improvement work to a single unit, patient care service, or defined patient population. By doing so, the team can test changes on a small scale before spreading the improvement ideas to other areas or patient populations. Finally, an S.M.A.R.T. aim must be timely: it must have a target completion date. If that date is more than 6 months from the project’s start, interim milestones should be set. While all S.M.A.R.T. aim elements are important, the two that most often lack clarity are measurability and timeliness. Poorly constructed aim statements address improving failed processes but do not define how much improvement is expected or by when. In his 2004 Institute for Healthcare Improvement (IHI) keynote address, Don Berwick, former President and CEO of the IHI, stated this concept well: “Some is not a number, soon is not a time.”

JP’s case: A team was convened to examine the failure and put in place reliable processes to prevent a similar occurrence. Specifically, the process had to ensure that “double checks” were consistently done before any blood product administration. The team developed the following S.M.A.R.T. aim statement (Fig. 79.5): To improve “double-check” process compliance for blood administration in the CICU from 50% to 95% within the next 90 days. This aim statement presumes that the process for a correct “double check” is already known and that a 50% baseline compliance rate had been established through a preliminary audit in the intensive care unit.
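
Writing the aim as numbers makes its measurability and timeliness explicit. The Python sketch below assumes each audited transfusion is recorded simply as whether a correct double check was documented; the function names and the dates in the usage lines are hypothetical.

```python
from datetime import date, timedelta

BASELINE_PERCENT = 50.0           # from the preliminary CICU audit
TARGET_PERCENT = 95.0             # "how much"
AIM_WINDOW = timedelta(days=90)   # "by when"


def compliance_percent(audited):
    """Percent of audited transfusions with a documented double check."""
    if not audited:
        raise ValueError("no audited transfusions to measure")
    return 100.0 * sum(audited) / len(audited)


def aim_met(audited, measured_on, project_start):
    """True if the target is reached no later than 90 days after the start."""
    on_time = measured_on <= project_start + AIM_WINDOW
    return on_time and compliance_percent(audited) >= TARGET_PERCENT


# Hypothetical audit: 19 of 20 transfusions had a documented double check.
audit = [True] * 19 + [False]
print(compliance_percent(audit))
print(aim_met(audit, measured_on=date(2024, 3, 1), project_start=date(2024, 1, 15)))
```

The constants make Berwick’s point literal: “some” becomes 95 percent and “soon” becomes 90 days.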
