Studies have suggested that operator proficiency has a substantial effect on complication rates and procedural outcomes. Endovascular simulators have been used for training and have been proposed as an alternative to conventional skills assessment. The present study sought to validate simulation as an objective method of evaluating proficiency in carotid artery stenting. Interventional cardiologists classified as novice, intermediate, or experienced practitioners performed 3 simulated, interactive carotid stenting cases on an AngioMentor endovascular simulator. An automated algorithm scored the participants on technical performance, medical management, and angiographic results. A total of 33 interventional cardiologists (8 novices, 15 intermediates, and 10 experts) completed 82 simulated procedures. The composite scores for the case simulations varied significantly by operator experience, with better (lower) scores in the more experienced groups (p <0.0001). The metrics that discriminated among the operator experience groups included fluoroscopy time, crossing the carotid lesion before filter deployment with devices other than a 0.014-in. wire, and incomplete coverage of the lesion by the stent. In conclusion, the results of the present study show that a simulator with an automated scoring system can discriminate between levels of operator proficiency in carotid artery stenting. Simulator-based performance assessment could have a role in initial and ongoing proficiency evaluation and in credentialing operators who perform high-risk endovascular procedures.

Carotid artery stenting (CAS) with an embolic protection device is a relatively new endovascular alternative to endarterectomy. It typifies recent endovascular advances: sophisticated devices, complex procedures, and little tolerance for even small errors, with a periprocedural rate of stroke or death of 2% to 6%. Studies have suggested that operator proficiency, as reflected by procedural volume, has a substantial effect on complication rates and procedural outcomes. Concerns about errors and costs in healthcare have highlighted the challenges of operator training, performance assessment, and credentialing for new, high-risk endovascular techniques such as CAS. Recent CAS clinical trials mandated rigorous training programs for participating interventionalists, and clinical competence metrics have been proposed for national credentialing. Endovascular simulators have been proposed as a way to improve operator skills before operators treat patients, and simulator-trained novice operators have been shown to achieve significant improvements in CAS procedure duration and performance, with short- and long-term results comparable to those of more experienced interventionalists. Although simulators are widely accepted for training, using them to evaluate performance is a relatively new concept, and early studies have produced mixed results. The objective of the Assessment of Operator Performance by the Carotid Stenting Simulator Study (ASSESS) was to validate simulator-based metrics that discriminate among levels of operator experience on the basis of technical and cognitive skills, and to evaluate simulator performance as a tool for assessing CAS proficiency.

Methods

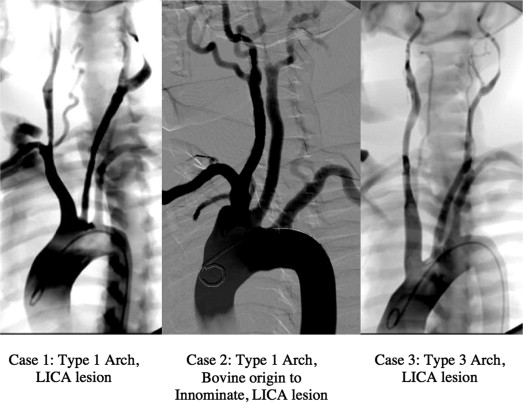

A total of 33 practicing interventional cardiologists agreed to participate in the present study. They were classified as novice, intermediate, or experienced practitioners according to the self-reported number of CAS procedures they had performed in the year before the study. Novices were defined as those with no previous CAS experience, intermediates as those who had performed <20 CAS procedures, and experienced practitioners as those who had performed >40 CAS procedures. No participant reported 20 to 40 procedures. Each participant was asked to perform 4 simulated carotid stenting cases: a proctored introductory case to become familiar with the simulator and its components, followed by 3 carotid stenting procedures of increasing difficulty (Figure 1). The cases and their sequence were identical for all participants. Sessions lasted no more than 120 minutes: a 30-minute orientation plus 30 minutes allotted for each of the 3 scored cases. A technician assisted each participant with the software interface and basic simulator operation but not with any clinical or interventional aspect of the procedure. Participants received no performance reports, feedback, or scores during the sessions.
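The grouping rule above is simple enough to state as code. The following Python sketch is illustrative only: `classify_operator` is a hypothetical helper, and treating a count of 0 in the prior year as "no previous CAS experience" is an assumption, because the text defines novices by any previous experience. The session-budget constants assume the 30-minute orientation covers the introductory case and that the 30-minute allotment applies to each of the 3 scored cases.

```python
from typing import Optional


def classify_operator(cas_last_year: int) -> Optional[str]:
    """Hypothetical helper: map a self-reported CAS count from the year before
    the study to an experience group. Returns None for 20 to 40 procedures,
    a range the study did not define (no participant fell in it)."""
    if cas_last_year < 0:
        raise ValueError("procedure count cannot be negative")
    if cas_last_year == 0:
        # Assumption: zero procedures is treated as "no previous CAS experience".
        return "novice"
    if cas_last_year < 20:
        return "intermediate"
    if cas_last_year > 40:
        return "experienced"
    return None  # 20 to 40 procedures: no category defined


# Session budget, assuming the orientation includes the introductory case.
ORIENTATION_MIN = 30
MINUTES_PER_SCORED_CASE = 30
SCORED_CASES = 3
SESSION_LIMIT_MIN = ORIENTATION_MIN + MINUTES_PER_SCORED_CASE * SCORED_CASES
```

Returning `None` for 20 to 40 procedures makes the gap in the definitions explicit instead of silently assigning those operators to a neighboring group.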

The AngioMentor endovascular simulator (Simbionix, Cleveland, Ohio) was used in the present study. Its interactive equipment mimics a modern angiographic suite and provides realistic tactile feedback to the operator. The simulator software provides real-time fluoroscopic imaging, digital subtraction angiography, road mapping, cine-angiographic storage and retrieval, a quantitative carotid angiography measurement tool, C-arm rotation in 4 directions, and table movement. The intravascular devices used in the present study were modified versions that were virtually indistinguishable from their commercially available counterparts. Participants could select from a broad range of generic intravascular devices, with a choice of catheter sizes and of balloon and stent diameters and lengths. Balloons were inflated with a pressure-calibrated indeflator device, and stents were deployed with a modified stent deployment handle. The embolic protection device available during the simulation was based on the Angioguard (Cordis, Johnson & Johnson, Bridgewater, New Jersey) and required users to select a device size matching the target vessel diameter. The cases were interactive: simulated blood pressure and heart rate changed in response to the procedure and required treatment with simulated pharmacologic agents to achieve an appropriate hemodynamic response.

Experienced interventional cardiologists developed a scoring algorithm based on the metrics collected by the simulator in 3 broad categories: technical performance, medical management, and angiographic results. Each metric was assigned a score reflecting its relative clinical importance. The simulator software was automated to collect, for each case, most of the objective parameters required by the scoring algorithm (see the Appendix). Medical management was scored according to the hemodynamic response and to the dose, route, and timing of the adjunctive pharmacotherapy administered during the case. Blood pressure or heart rate values that remained above or below predefined clinically significant thresholds for ≥60 seconds without an attempt at correction by the operator adversely affected the score. Simulated patients who were symptomatic or hemodynamically unstable were considered to be at greater risk, and their cases were graded more stringently. Preprocedure, postprocedure, and maximum activated clotting times resulted from the anticoagulation the operator administered during the simulation, and these responses were incorporated into the score. Errors adversely affected the score.
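The hemodynamic rule (a deviation beyond a threshold lasting ≥60 seconds with no corrective action) can be sketched as a run-length scan. This Python sketch assumes the vital sign is sampled once per second and that each second is flagged if the operator intervened; the function name, the 1-Hz sampling, and the 90 to 160 mm Hg example thresholds are hypothetical and are not the simulator's actual implementation or the study's thresholds.

```python
from typing import Sequence


def sustained_untreated_deviations(
    values: Sequence[float],
    treated: Sequence[bool],
    low: float,
    high: float,
    min_duration_s: int = 60,
) -> int:
    """Count episodes in which a once-per-second signal stays outside
    [low, high] for at least min_duration_s consecutive seconds while the
    operator has made no corrective intervention during that episode."""
    if len(values) != len(treated):
        raise ValueError("values and treated must have the same length")
    episodes = 0
    run_length = 0       # consecutive out-of-range seconds in the current episode
    run_treated = False  # True once the operator intervenes during this episode
    counted = False      # True once this episode has been counted
    for value, intervened in zip(values, treated):
        if low <= value <= high:
            # Back within range: reset the episode state.
            run_length, run_treated, counted = 0, False, False
            continue
        run_length += 1
        run_treated = run_treated or intervened
        if run_length >= min_duration_s and not run_treated and not counted:
            episodes += 1
            counted = True
    return episodes


# Hypothetical example: systolic pressure of 180 mm Hg for 65 s, never treated.
systolic = [120.0] * 10 + [180.0] * 65 + [120.0] * 5
untreated_episodes = sustained_untreated_deviations(
    systolic, [False] * len(systolic), low=90.0, high=160.0
)
```

In this sketch an intervention made after the 60-second mark does not cancel an episode that has already been counted; the text does not say how the study's algorithm handled that case.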

For each case, the maximum possible score was calculated from the parameters applicable to that case, and each participant's final score was expressed as a percentage of this maximum. Lower scores reflected better performance. Groups were compared using analysis of variance for continuous variables and the chi-square test for categorical data. Log-transformed scores were modeled with both group and case number as factors using analysis of variance, and nonsignificant interactions were removed from the model. For the primary outcome, Bonferroni-corrected post hoc pairwise comparisons of the log-transformed scores were performed among all 3 groups. Individual simulator parameters were compared using Kruskal-Wallis tests, and the pairwise p values for these parameters were not adjusted for multiple comparisons.
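The normalization and adjustment steps can be sketched in Python as follows. The names `percent_of_maximum`, `log_score`, and `bonferroni` and the metric-to-points dictionaries are hypothetical. The natural logarithm is an assumption, although it is consistent with Table 2, where exp(3.1) ≈ 22 lies just below the expert mean raw score of 26.6, as a geometric mean should. The two-factor analysis of variance itself would normally be run in a statistics package and is not reproduced here.

```python
import math
from typing import Dict, List, Sequence


def percent_of_maximum(penalties: Dict[str, float], max_points: Dict[str, float]) -> float:
    """Penalty points earned as a percentage of the maximum possible score.

    Only metrics listed in max_points (those applicable to this case) count;
    penalties recorded for any other metric are ignored.
    """
    total_max = sum(max_points.values())
    if total_max <= 0:
        raise ValueError("maximum possible score must be positive")
    earned = sum(penalties.get(metric, 0.0) for metric in max_points)
    return 100.0 * earned / total_max


def log_score(percent: float) -> float:
    """Natural-log transform of a percentage score (undefined for a score of 0)."""
    if percent <= 0:
        raise ValueError("log transform requires a positive score")
    return math.log(percent)


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni adjustment: multiply each p value by the number of
    comparisons and cap the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

With 3 groups there are 3 pairwise comparisons, so a Bonferroni-corrected p value is the unadjusted value multiplied by 3, capped at 1.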

Results

The simulator-based performance of the 33 interventional cardiologists (8 novices, 15 intermediates, and 10 experts) was studied. Two thirds of the participants had previous simulator experience. The self-reported experience of the participants is listed in Table 1. The 33 participants completed 82 full simulated procedures, an average of 2.5 cases per operator. Three participants (2 intermediates and 1 expert) attempted only 2 of the 3 scored CAS cases at their own request. The results of 14 simulated procedures (7 scored CAS cases among the experts, 3 among the intermediates, and 4 among the novices; p = 0.122) were excluded because the cases were aborted at the participant's request (because of time constraints) or because of technical difficulties with the simulator.
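The case counts in this paragraph can be reconciled group by group. The Python sketch below uses only numbers stated in the text. It reads "attempted only 2 of the 3 scored CAS cases" as each of those 3 participants skipping exactly one case.

```python
# Per-group accounting of scored CAS cases, using counts reported in the text.
GROUPS = {
    # group: (operators, scored cases not attempted, scored cases aborted)
    "expert": (10, 1, 7),
    "intermediate": (15, 2, 3),
    "novice": (8, 0, 4),
}
SCORED_CASES_PER_OPERATOR = 3


def analyzed_cases(operators: int, not_attempted: int, aborted: int) -> int:
    """Scored cases remaining after removing skipped and aborted cases."""
    return operators * SCORED_CASES_PER_OPERATOR - not_attempted - aborted


per_group = {group: analyzed_cases(*counts) for group, counts in GROUPS.items()}
total_operators = sum(operators for operators, _, _ in GROUPS.values())
total_cases = sum(per_group.values())
mean_cases_per_operator = total_cases / total_operators
```

The per-group totals (22, 40, and 20) match the n column of Table 2, and 82/33 ≈ 2.48 rounds to the reported average of 2.5 cases per operator.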

| Baseline Experience | Total (n = 33) | Novice (n = 8) | Intermediate (n = 15) | Expert (n = 10) | p Value |
|---|---|---|---|---|---|
| Duration of experience (yrs) | 10 ± 9.7 | 3 ± 6.4 | 8.2 ± 9.5 | 18.3 ± 6 | 0.0009 |
| Annual coronary angiograms (n) | 308.3 ± 204.5 | 325 ± 130.9 | 241.7 ± 195 | 395 ± 245.5 | 0.18 |
| Annual percutaneous coronary interventions (n) | 172 ± 137.5 | 110 ± 116 | 165 ± 130.2 | 232 ± 151.3 | 0.17 |
| Annual diagnostic carotid angiograms (n) | 37.2 ± 52.3 | 0 ± 0 | 24.8 ± 29.6 | 85.5 ± 65.6 | 0.0003 |
| Annual carotid interventions (n) | 19.5 ± 28.5 | 0 ± 0 | 9.9 ± 15 | 49.5 ± 32.5 | <0.0001 |
| Previous simulator experience (%) | 67 | 13 | 80 | 90 | 0.0008 |

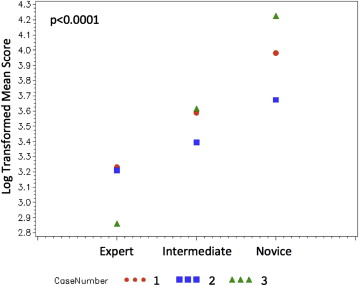

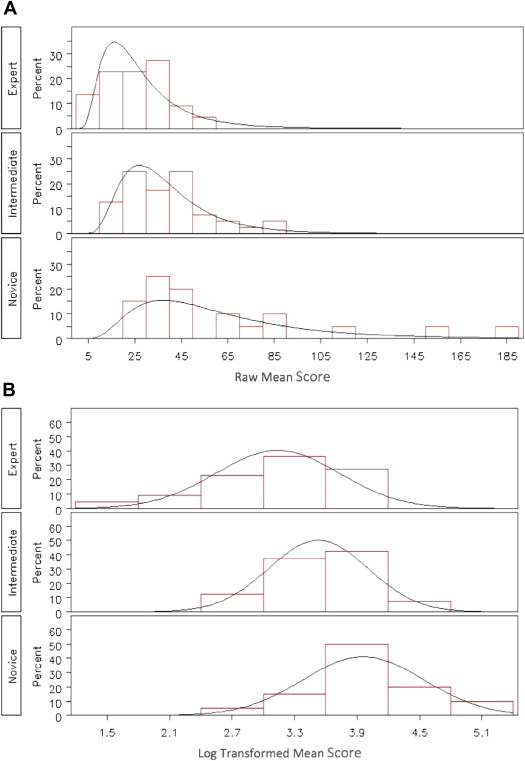

Overall, the mean raw and log-transformed composite scores for the 3 case simulations varied significantly by operator experience, with better (lower) composite scores in the more experienced groups (p <0.0001; Figure 2 and Table 2). Analyzed separately, 2 of the 3 cases were each sufficient on their own to differentiate operators by experience (case 1, p = 0.01; case 3, p = 0.005), whereas case 2 was not (p = 0.31). The raw score distributions were markedly skewed, with a wider, more scattered distribution in the novice group. After log transformation, the distributions in each of the 3 groups appeared normal (Figure 3).
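Why a log transformation can make skewed scores look normal is easy to see on a toy sample. The Python sketch below uses hypothetical values, not study data, in which each score doubles the previous one. It assumes the natural logarithm and the moment coefficient of skewness g1 = m3 / m2^(3/2), computed without a small-sample bias correction.

```python
import math
from typing import Sequence


def sample_skewness(xs: Sequence[float]) -> float:
    """Moment coefficient of skewness g1 = m3 / m2**1.5 (no bias correction)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


# Hypothetical right-skewed scores: each value doubles the previous one.
raw = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0]
logged = [math.log(x) for x in raw]

raw_skew = sample_skewness(raw)        # clearly positive (long upper tail)
logged_skew = sample_skewness(logged)  # essentially zero
```

Taking logs turns the doubling sequence into equally spaced values, so the long upper tail disappears and the skewness drops to zero, up to floating-point error.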

| Case/Group | n | Mean Raw Score | Log-Transformed Score, Mean | Log-Transformed Score, Median | Log-Transformed Score, Minimum | Log-Transformed Score, Maximum | p Value |
|---|---|---|---|---|---|---|---|
| Overall | | | | | | | <0.0001 |
| Expert | 22 | 26.6 ± 12.9 | 3.1 ± 0.6 | 3.1 | 1.5 | 3.9 | |
| Intermediate | 40 | 38 ± 18.0 | 3.5 ± 0.5 | 3.6 | 2.6 | 4.5 | |
| Novice | 20 | 62.7 ± 42.7 | 4.0 ± 0.6 | 3.8 | 3 | 5.2 | |
| Case 1 | | | | | | | 0.01 |
| Expert | 9 | 28.7 ± 14.5 | 3.2 ± 0.6 | 3.1 | 2.3 | 3.9 | |
| Intermediate | 14 | 39.6 ± 18.0 | 3.6 ± 0.4 | 3.7 | 2.9 | 4.5 | |
| Novice | 8 | 57.4 ± 21.9 | 4.0 ± 0.4 | 4 | 3.3 | 4.5 | |
| Case 2 | | | | | | | 0.31 |
| Expert | 8 | 29.4 ± 13.3 | 3.2 ± 0.8 | 3.5 | 1.5 | 3.8 | |
| Intermediate | 14 | 33.0 ± 14.6 | 3.4 ± 0.5 | 3.5 | 2.6 | 4.1 | |
| Novice | 6 | 41.1 ± 13.4 | 3.7 ± 0.3 | 3.6 | 3.3 | 4.2 | |
| Case 3 | | | | | | | 0.005 |
| Expert | 5 | 18.2 ± 5.0 | 2.9 ± 0.3 | 3 | 2.3 | 3.1 | |
| Intermediate | 12 | 41.9 ± 21.6 | 3.6 ± 0.5 | 3.6 | 3 | 4.4 | |
| Novice | 6 | 91.2 ± 67.1 | 4.2 ± 0.9 | 4.3 | 3 | 5.2 | |

Only a few specific parameters contributed to the discrimination among the 3 operator experience groups (Table 3). Fluoroscopy time distinguished the experts from the novices (p = 0.02) and from the intermediates (p = 0.03). Crossing the carotid lesion before filter deployment with devices other than a 0.014-in. wire highlighted the poor performance of the novices. The novices were more likely to cross the lesion with the guide or sheath (p = 0.001, overall; p = 0.006, novices vs intermediates; p = 0.008, novices vs experts) and with a 0.035-in. wire before filter placement (p = 0.004, overall; p = 0.02, novices vs experts). The novices were also distinguished from the other groups by incomplete coverage of the lesion by the stent (p = 0.017, overall; p = 0.03, novices vs intermediates; p = 0.03, novices vs experts) and by placement of the postdilation balloon beyond the stent margins (p = 0.02, overall; p = 0.005, novices vs experts). The number of diagnostic catheters used discriminated between intermediate and expert participants, especially in the more complex cases, but was not sufficient for overall discrimination (p = 0.08). No other individual simulator metric was sufficient to stratify the operators by level of expertise.

| Simulator Metric | Novice (n = 20 cases) | Intermediate (n = 40 cases) | Expert (n = 22 cases) | p, All | p, Expert vs Intermediate | p, Expert vs Novice | p, Intermediate vs Novice |
|---|---|---|---|---|---|---|---|
| Fluoroscopy time, min:s (mean score per case) | 14:42 ± 5:40 (0.42 ± 0.5) | 13:35 ± 4:50 (0.27 ± 0.4) | 11:06 ± 3:42 (0.09 ± 0.3) | 0.04 | 0.03 | 0.02 | 0.39 |
| Crossing lesion before filter ∗ | | | | | | | |
| With 0.035-in. wire | 4.4 ± 7.4 | 1.1 ± 2.9 | 1.0 ± 1.7 | 0.004 | 0.48 | 0.02 | 0.002 |
| With catheter | 6.2 ± 8.3 | 0 ± 0 | 0 ± 0 | <0.0001 | 1 | 0.003 | <0.0001 |
| With guide/sheath | 3.1 ± 4.3 | 0.3 ± 1.6 | 0 ± 0 | 0.001 | 0.44 | 0.008 | 0.006 |
| Time to position guide/sheath in common carotid artery (CCA), min:s (mean score per case) | 13:57 ± 4:31 (0.24 ± 0.43) | 14:45 ± 5:32 (0.37 ± 0.54) | 11:02 ± 5:21 (0.16 ± 0.45) | 0.046 | 0.02 | 0.045 | 0.64 |
| Incomplete coverage of lesion by stent ∗ | 0.15 ± 0.46 | 0.01 ± 0.03 | 0 ± 0 | 0.017 | 0.45 | 0.03 | 0.03 |
| Postdilation balloon extends beyond stent margins ∗ | 1.5 ± 1.8 | 0.7 ± 1.4 | 0.2 ± 0.8 | 0.02 | 0.12 | 0.005 | 0.13 |
