Cardiac resynchronization therapy (CRT) has emerged as an attractive intervention to improve left ventricular mechanical function by changing the sequence of electrical activation. Unfortunately, many patients receiving CRT do not benefit but are still exposed to device complications and costs. Thus, there is a need for better selection criteria. Current criteria for CRT eligibility include a QRS duration ≥120 ms. However, QRS morphology is not considered, although it can indicate the cause of delayed conduction. Recent studies have suggested that only patients with left bundle branch block (LBBB) benefit from CRT, and not patients with right bundle branch block or nonspecific intraventricular conduction delay. The authors review the pathophysiologic and clinical evidence explaining why only patients with complete LBBB benefit from CRT. Furthermore, they review how the 120-ms threshold for defining LBBB was derived subjectively at a time when the criteria for LBBB and right bundle branch block were mistakenly reversed. Three key studies over the past 65 years have suggested that one-third of patients diagnosed with LBBB by conventional electrocardiographic criteria may not have true complete LBBB but likely have a combination of left ventricular hypertrophy and left anterior fascicular block. On the basis of additional insights from computer simulations, the authors propose stricter criteria for complete LBBB that include a QRS duration ≥140 ms for men and ≥130 ms for women, along with mid-QRS notching or slurring in ≥2 contiguous leads. Further studies are needed to reinvestigate the electrocardiographic criteria for complete LBBB and the implications of these criteria for selecting patients for CRT.

In this review, we consider the emerging clinical evidence that patients without complete left bundle branch block (LBBB) do not benefit from cardiac resynchronization therapy (CRT), and we present evidence that approximately one-third of patients diagnosed with LBBB by conventional electrocardiographic (ECG) criteria do not actually have complete LBBB but more likely have a combination of left ventricular hypertrophy (LVH) and left anterior fascicular block. We present criteria for the diagnosis of complete LBBB that include a negative terminal deflection in lead V1 (QS or rS), a minimum QRS duration of 140 ms in men and 130 ms in women, and the presence of mid-QRS notching or slurring. These criteria will require further validation, including testing of their ability to identify appropriate candidates for CRT.
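To make the three proposed criteria concrete, the following is a minimal Python sketch of how they could be combined into a single yes/no check. It assumes the ECG has already been measured and summarized; the `EcgFeatures` container, its field names, and the function `meets_proposed_complete_lbbb` are hypothetical illustrations, not a validated diagnostic tool. Following the abstract, it assumes notching or slurring must appear in ≥2 contiguous leads, and it leaves the judgment of contiguity to whoever supplies the count.

```python
from dataclasses import dataclass


@dataclass
class EcgFeatures:
    """Hypothetical summary of measurements taken from a 12-lead ECG."""
    sex: str                    # "male" or "female"
    qrs_duration_ms: float      # global QRS duration
    v1_terminal_negative: bool  # QS or rS pattern in lead V1
    notched_lead_count: int     # contiguous leads with mid-QRS notching or slurring (assumed precomputed)


# Sex-specific minimum QRS durations proposed in the text, in ms
MIN_QRS_MS = {"male": 140, "female": 130}


def meets_proposed_complete_lbbb(ecg: EcgFeatures) -> bool:
    """Return True only if all three proposed criteria for complete LBBB are met."""
    if ecg.sex not in MIN_QRS_MS:
        raise ValueError(f"unknown sex: {ecg.sex!r}")
    long_enough = ecg.qrs_duration_ms >= MIN_QRS_MS[ecg.sex]
    notched = ecg.notched_lead_count >= 2
    return ecg.v1_terminal_negative and long_enough and notched


# A QRS of 135 ms falls below the proposed threshold for men but not for women
print(meets_proposed_complete_lbbb(EcgFeatures("male", 135, True, 3)))
print(meets_proposed_complete_lbbb(EcgFeatures("female", 135, True, 3)))
```

Encoding the thresholds in a lookup table keeps the sex-specific cutoffs in one place, so the same check can be rerun if later validation studies revise them.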

Cardiac Resynchronization Therapy

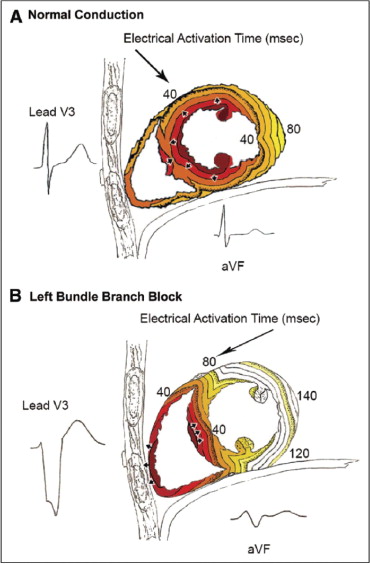

In the presence of complete LBBB, there is a significant delay between activation of the interventricular septum and activation of the left ventricular (LV) free wall (Figure 1). Thus, reducing this delay by simultaneous pacing of the septum and LV free wall may resynchronize mechanical contraction. However, when the QRS is prolonged by right bundle branch block (RBBB) or LVH, the LV endocardium is still activated normally via the rapidly conducting Purkinje system, so there is comparatively little LV electrical dyssynchrony for CRT to correct.

The major randomized clinical trials that led to the widespread adoption of CRT required a QRS duration ≥120 ms but did not select patients on the basis of QRS morphology, which can usually identify the cause of the prolonged ventricular depolarization. In the Comparison of Medical Therapy, Pacing, and Defibrillation in Heart Failure (COMPANION) trial, patients without LBBB did not have a statistically significant benefit, and those with QRS durations ≤147 ms had no benefit at all. A pooled analysis of 2 smaller trials found no significant improvement in LV ejection fraction or maximal oxygen consumption in patients with RBBB. A recent report on 14,946 Medicare patients receiving CRT showed that those without LBBB had significantly higher early and late mortality than patients with LBBB, and that a QRS duration ≥150 ms predicted more favorable outcomes in LBBB but not in RBBB. Although these studies involved patients with New York Heart Association class III and IV heart failure, the Multicenter Automatic Defibrillator Implantation Trial–Cardiac Resynchronization Therapy (MADIT-CRT) enrolled patients with New York Heart Association class I and II heart failure and QRS durations ≥130 ms. The presence or effect of LBBB versus RBBB was not reported in the original publication; however, patients with QRS durations <150 ms received no benefit. Subsequent analysis showed that patients with LBBB receiving CRT had a significant 55% reduction in heart failure hospitalizations or mortality (hazard ratio [HR] 0.45, p <0.05), whereas patients without LBBB had a nonsignificant increase in these adverse events. A further analysis examined QRS duration thresholds separately for men and women.
The benefit from CRT was significant in women beginning at a QRS duration ≥130 ms (QRS duration 130 to 139 ms: HR 0.19, p <0.05), but there was no benefit in men with QRS durations <140 ms (HR 1.12, p >0.05) and only a nonsignificant benefit in men with QRS durations of 140 to 159 ms (HR 0.76, p >0.05). Although the response to CRT depends on more than the restoration of electrical synchrony and therefore cannot serve as proof of concept, these analyses agree with our proposed minimum QRS duration thresholds for complete LBBB: 130 ms in women and 140 ms in men.
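The percentages quoted above follow from simple arithmetic on the hazard ratios: a hazard ratio of 0.45 corresponds to a hazard 55% lower than in the comparison group. The short Python sketch below applies the same conversion to each MADIT-CRT hazard ratio cited in this section. The helper name `hazard_change_percent` is hypothetical, and the conversion says nothing about statistical significance; as noted above, HRs of 1.12 and 0.76 were not significant.

```python
def hazard_change_percent(hazard_ratio: float) -> float:
    """Relative change in hazard implied by a hazard ratio (negative means a reduction)."""
    return round((hazard_ratio - 1.0) * 100, 1)


# Hazard ratios quoted in the text for the MADIT-CRT analyses
reported = {
    "LBBB, all": 0.45,
    "women, QRS 130-139 ms": 0.19,
    "men, QRS <140 ms": 1.12,
    "men, QRS 140-159 ms": 0.76,
}

for group, hr in reported.items():
    print(f"{group}: HR {hr} -> {hazard_change_percent(hr):+.0f}% change in hazard")
```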

Early History of Defining Bundle Branch Block

Eppinger and Rothberger created the first experimental bundle branch block in dogs in 1909 by injecting silver nitrate into the left ventricle. In 1914, Carter published the first series of electrocardiograms from patients with bundle branch block and observed that RBBB was more common than LBBB, but he mistakenly switched the diagnoses of LBBB and RBBB, an error later attributed to differences in heart position between the dog and the human. In 1920, Wilson and Herrmann presented criteria for bundle branch block, observing that the QRS duration was usually much more than 100 ms and often >150 ms. They commented on the frequent notching within the QRS complex but continued to reverse the diagnoses of LBBB and RBBB. That same year, Oppenheimer and Pardee published 2 autopsy cases of bundle branch block and proposed that the diagnoses of LBBB and RBBB had been reversed in patients. The debate was settled in 1930, when Barker et al used electrical stimulation of the epicardium of the human heart to show that the definitions of LBBB and RBBB had indeed been reversed. This controversy renewed interest in precordial leads as an aid to diagnosis.

Wilson proposed more detailed definitions of bundle branch block in 1941, after precordial leads had become widely available. He observed in a dog model of LBBB that leads V1 and V2 showed rS complexes and that leads V5 and V6 showed single broad, notched R deflections. Wilson stated that when precordial leads were not available, the key to distinguishing “complete bundle branch block” from “incomplete bundle branch block” and other disturbances of intraventricular conduction was a QRS duration ≥120 ms. Thus, the 120-ms threshold was established by pattern recognition comparing dogs with humans, not by objective measurements in humans. Notably, the concepts of left anterior and left posterior fascicular block had not yet been developed, and it was not appreciated in the literature that LVH could prolong QRS duration beyond 120 ms.

Challenges to the Definition of Left Bundle Branch Block

In 1956, Grant and Dodge studied 128 patients with QRS durations ≥120 ms and LV conduction delays who had an electrocardiogram showing normal conduction within the previous 2 years (within 6 months in 80%). They concluded that “the classical explanation of LBBB, which is based upon animal experimentation, is only partially accurate when applied to human LBBB,” and they proposed that one-third of electrocardiograms classified as LBBB by conventional criteria were incorrectly diagnosed. This proposal was based on 2 observations: the QRS duration prolongs by substantially more than 40 ms with the development of LBBB, and the initial electrical forces must change with the development of LBBB because the septum is activated differently.

Grant and Dodge noted that although the QRS duration was believed, on the basis of excitation in the dog, to prolong by 40 ms with the development of LBBB, they often observed prolongation of 70 to 80 ms. As shown in Figure 1, in LBBB electrical activation must proceed through the interventricular septum from the right ventricular (RV) endocardium to the LV endocardium, which requires a minimum of 40 ms. An additional 50 ms is required for activation to reach the posterolateral wall, and another 50 ms to activate the posterolateral wall completely. This produces a total QRS duration of ≥140 ms. The QRS is therefore prolonged by ≥60 ms beyond normal conduction, not just by the 40 ms needed to cross the septum, because in LBBB the depolarization wavefront is not traveling in the rapidly conducting Purkinje system when it reaches the LV endocardium.
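The timing argument in the preceding paragraph is additive, and the short Python sketch below spells out the sum. The phase labels and names (`LBBB_PHASES_MS`, `minimum_lbbb_qrs_ms`, `NORMAL_QRS_MS`) are hypothetical. The 80-ms normal QRS duration is an assumption: it is not stated in the text, only implied by the 140-ms total and the ≥60-ms prolongation.

```python
# Approximate minimum activation phases in complete LBBB, as described in the text (ms)
LBBB_PHASES_MS = [
    ("transseptal conduction, RV to LV endocardium", 40),
    ("spread from the LV septum to the posterolateral wall", 50),
    ("activation of the posterolateral wall", 50),
]

# Assumption: normal QRS duration implied by the text (140 ms total minus 60 ms prolongation)
NORMAL_QRS_MS = 80


def minimum_lbbb_qrs_ms(phases=LBBB_PHASES_MS) -> int:
    """Sum the phase durations to estimate the minimum QRS duration in complete LBBB."""
    return sum(duration for _, duration in phases)


total = minimum_lbbb_qrs_ms()
print(total, total - NORMAL_QRS_MS)  # 140 60
```

The last two phases account for the extra prolongation beyond the 40-ms septal delay, reflecting slow myocardial conduction outside the Purkinje system.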

In normal conduction, electrical activation of the LV dominates that of the smaller RV in generating the QRS complex, and in the first 30 to 40 ms a large portion of the QRS arises from left-to-right activation of the septum (Figure 1). Grant and Dodge noted that with the onset of LBBB, the direction of the electrical forces (and thus QRS morphology) in the initial 30 to 40 ms must change, because only the RV is being activated at first and the septum is now activated from right to left. However, among their 128 patients who developed LBBB by conventional ECG criteria, 51 (40%) had no change in the direction of initial QRS forces, suggesting that complete LBBB did not cause the QRS prolongation in these patients. A recent study suggested that an R wave ≥0.1 mV in lead V1 may exclude the diagnosis of LBBB, because the V1 R wave is a sign of intact left-to-right septal activation. This logic is sound; however, patients with LBBB and septal infarction or scar can develop large R waves in lead V1 from unopposed RV free-wall activation.

We include an example of a patient (Figure 2) with LBBB by conventional ECG criteria (including a QRS duration of 142 ms) who in fact had gradual QRS prolongation at a rate of 6 ms/year, documented by 42 ECGs recorded over a 6.5-year period, which is not consistent with LBBB. Note the nearly identical initial forces and QRS morphology as the QRS interval prolongs on successive ECGs. In contrast, Figures 3 and 4 show patients who developed complete LBBB with abrupt QRS prolongation from 76 to 148 ms and from 92 to 156 ms, respectively, accompanied by notable changes in initial forces and QRS morphology. Below, we present specific criteria for diagnosing complete LBBB in the absence of serial recordings, which include the presence of mid-QRS notching or slurring.
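When serial ECGs are available, the two Grant and Dodge observations can be applied together. The following Python sketch is a simplified illustration, not a validated rule. `SerialEcgPair` and `consistent_with_new_complete_lbbb` are hypothetical names, and treating "substantially more than 40 ms" as a strict cutoff above 40 ms is an assumption. The Figure 2 starting value is back-calculated from the stated 6 ms/year over 6.5 years (about 39 ms in total). It is therefore approximate and is not a measured ECG value.

```python
from dataclasses import dataclass


@dataclass
class SerialEcgPair:
    """Hypothetical summary of an earlier ECG and a later ECG meeting conventional LBBB criteria."""
    earlier_qrs_ms: float
    later_qrs_ms: float
    initial_forces_changed: bool  # did the direction of the initial 30-40 ms QRS forces change?


# Simplifying assumption: prolongation must exceed the ~40 ms transseptal delay
TRANSSEPTAL_DELAY_MS = 40


def consistent_with_new_complete_lbbb(pair: SerialEcgPair) -> bool:
    """Apply both Grant and Dodge observations to a pair of serial ECGs."""
    prolongation = pair.later_qrs_ms - pair.earlier_qrs_ms
    return prolongation > TRANSSEPTAL_DELAY_MS and pair.initial_forces_changed


examples = {
    "Figure 3": SerialEcgPair(76, 148, True),
    "Figure 4": SerialEcgPair(92, 156, True),
    # Figure 2: earlier value approximated as 142 - 6 ms/year * 6.5 years
    "Figure 2": SerialEcgPair(142 - 6 * 6.5, 142, False),
}

for name, pair in examples.items():
    print(name, consistent_with_new_complete_lbbb(pair))
```

Both tests must pass, so the Figure 2 patient is flagged as inconsistent with new complete LBBB on either ground: the total prolongation is too small, and the initial forces did not change.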